I was reading something that suggested that trauma "tries" to spread itself, i.e., that the reason intergenerational trauma is a thing is that the traumatized part in a parent will take action to recreate that trauma in the child.

(More like, "here's a story for how this could work.")

Why on earth would trauma be agenty, in that way? It sounds like too much to swallow.

If some traumas try to replicate themselves in other minds but most don't, pretty soon the world will be awash in the replicator type.

It's unclear how high the fidelity of transmission is.

Thinking through this has given me a new appreciation of what @DavidDeutschOxf calls "anti-rational memes". I think he might be on to something in claiming that they are more or less at the core of all our problems on earth.

More from Eli Tyre

I started by simply stating that I thought the arguments I had heard so far don't hold up, and seeing if anyone was interested in going into it in depth with me.

CritRats!

— Eli Tyre (@EpistemicHope) December 26, 2020

I think AI risk is a real existential concern, and I claim that the CritRat counterarguments that I've heard so far (keywords: universality, person, moral knowledge, education, etc.) don't hold up.

Anyone want to hash this out with me? https://t.co/Sdm4SSfQZv

So far, a few people have engaged pretty extensively with me, for instance, scheduling video calls to talk about some of the stuff, or long private chats.

(Links to some of those that are public at the bottom of the thread.)

But in addition to that, there has been a much more sprawling conversation happening on twitter, involving a much larger number of people.

Having talked to a number of people, I then offered a paraphrase of the basic counter that I was hearing from people of the Crit Rat persuasion.

ELI'S PARAPHRASE OF THE CRIT RAT STORY ABOUT AGI AND AI RISK

— Eli Tyre (@EpistemicHope) January 5, 2021

There are two things that you might call "AI".

The first is non-general AI, which is a program that follows some pre-set algorithm to solve a pre-set problem. This includes modern ML.


In general, I am super up for short (1 to 10 hour) adversarial collaborations.

— Eli Tyre (@EpistemicHope) December 23, 2020

If you think I'm wrong about something, and want to dig into the topic with me to find out what's up / prove me wrong, DM me.

For instance, while I heartily agree with lots of what is said in this video, I don't think that the conclusion about how to prevent (the bad kind of) human extinction, with regard to AGI, follows.

There are a number of reasons to think that AGI will be more dangerous than most people are, despite both people and AGIs being qualitatively the same sort of thing (explanatory knowledge-creating entities).

And I maintain that, because of practical/quantitative (not fundamental/qualitative) differences, the development of AGI / TAI is very likely to destroy the world by default.

(I'm not clear on exactly how much disagreement there is. In the video above, Deutsch says "Building an AGI with perverse emotions that lead it to immoral actions would be a crime."

More from Culture

Translucent agate bowl with ornamental grooves and coffee-and-cream marbling. Found near Qift in southern Egypt. 300 - 1,000 BC. 📷 Getty Museum https://t.co/W1HfQZIG2V

Technicolor dreambowl, found in a grave near Zadar on Croatia's Dalmatian Coast. Made by melding and winding thin bars of glass, each adulterated with different minerals to get different colors. 1st century AD. 📷 Zadar Museum of Ancient Glass https://t.co/H9VfNrXKQK

100,000-year-old abalone shells used to mix red ocher, marrow, charcoal, and water into a colorful paste. Possibly the oldest artist's palettes ever discovered. Blombos Cave, South Africa. 📷 https://t.co/0fMeYlOsXG

Reed basket bowl with shell and feather ornaments. Possibly from the Southern Pomo or Lake Miwok cultures. Found in Santa Barbara, CA, circa 1770. 📷 British Museum https://t.co/F4Ix0mXAu6

Wooden bowl with concentric circles and rounded rim, most likely made of umbrella thorn acacia (Vachellia/Acacia tortilis). Qumran. 1st Century BCE. 📷 https://t.co/XZCw67Ho03

You May Also Like

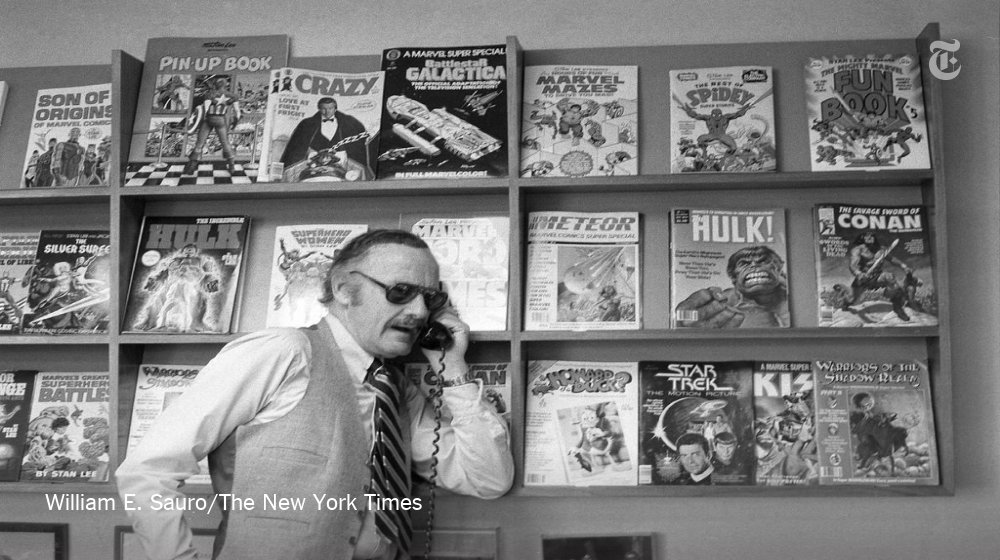

Stan Lee, who died Monday at 95, was born in Manhattan and graduated from DeWitt Clinton High School in the Bronx. His pulp-fiction heroes have come to define much of popular culture in the early 21st century.

Tying Marvel’s stable of pulp-fiction heroes to a real place (New York) served as a counterbalance to the sometimes gravity-challenged action and the improbability of the stories. That was just what Stan Lee wanted. https://t.co/rDosqzpP8i

The New York universe hooked readers. And the artists drew what they were familiar with, which made the Marvel universe authentic-looking, down to the water towers atop many of the buildings. https://t.co/rDosqzpP8i

The Avengers Mansion was a Beaux-Arts palace. Fans know it as 890 Fifth Avenue. The Frick Collection, which now occupies the place, uses the address of the front door: 1 East 70th Street.

Unfortunately, the results cited ("This work includes the identification of viral sequences in bat samples, and has resulted in the isolation of three bat SARS-related coronaviruses that are now used as reagents to test therapeutics and vaccines.") date from BEFORE the chimeric infectious clone grants were in place. https://t.co/DAArwFkz6v is from 2017, Rs4231.

https://t.co/UgXygDjYbW is from 2016, RsSHC014 and RsWIV16.

https://t.co/krO69CsJ94 is from 2013, RsWIV1. Notice that this is before the beginning of the project starting in 2016. Also remember that they reported only 3 isolates/live viruses. RsSHC014 is a live infectious clone that is just as alive as those other "isolates".

P.D. is somehow able to use funds he has not yet received, and to send results and sequences from late 2019 back in time to 2015, 2013, and 2016!

https://t.co/4wC7k1Lh54 Ref 3: Why were ALL your pangolin samples PCR negative? To avoid deep sequencing and accidentally revealing Paguma larvata and Oryctolagus cuniculus?

BREAKING: President Donald Trump has submitted his answers to questions from special counsel Robert Mueller

— Ryan Saavedra (@RealSaavedra) November 20, 2018

Mueller's going to officially end his investigation all on his own, and he's gonna say he found no evidence of Trump campaign/Russian collusion during the 2016 election.

Democrats & DNC Media are going to LITERALLY have nothing coherent to say in response to that.

Mueller's team was 100% partisan.

That's why it's brilliant. NOBODY will be able to claim this team of partisan Democrats didn't go the EXTRA 20 MILES looking for ANY evidence they could find of Trump campaign/Russian collusion during the 2016 election.

They looked high.

They looked low.

They looked underneath every rock, behind every tree, into every bush.

And they found...NOTHING.

Those saying Mueller will file obstruction charges against Trump: laughable.

What documents did Trump tell the Mueller team it couldn't have? What witnesses were withheld and never interviewed?

THERE WEREN'T ANY.

Mueller got full 100% cooperation as the record will show.