Latest Saves

Top 10 Data Science Projects with Python

✔️ 10 Datasets

✔️ 10 Projects with solution

👇🧵

1️⃣ Project: Detecting Spam

✔️ Big email dataset

✔️ 35,000+ spam and ham messages

✔️ Learn how to filter

https://t.co/wvNSeFSbmr

Solution 👇🧵

1️⃣ Solution: Detecting Spam

✔️ How to build a spam filter

✔️ Using Scikit-learn

✔️ Naive-Bayes and
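A minimal sketch of the Naive Bayes idea behind a spam filter like this. The linked solution uses Scikit-learn; this sketch swaps in a hand-rolled multinomial Naive Bayes built on the Python standard library only, so the mechanics are visible. The toy messages and the names `tokenize`, `train`, and `predict` are hypothetical and do not come from the dataset or the solution.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


def train(messages):
    """Count words per label. `messages` is a list of (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab


def predict(model, text):
    """Return the label with the highest log-probability for `text`."""
    word_counts, label_counts, vocab = model
    total_messages = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # Start from the log prior of the label.
        score = math.log(label_counts[label] / total_messages)
        words_in_label = sum(word_counts[label].values())
        for word in tokenize(text):
            if word not in vocab:
                continue  # ignore words never seen during training
            # Laplace (add-one) smoothing avoids log(0) for unseen pairs.
            score += math.log(
                (word_counts[label][word] + 1) / (words_in_label + len(vocab))
            )
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy data, far smaller than the real email dataset.
TOY_MESSAGES = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("are we still meeting for lunch", "ham"),
    ("see you at the meeting tomorrow", "ham"),
]

model = train(TOY_MESSAGES)
print(predict(model, "claim your free prize"))
print(predict(model, "lunch meeting tomorrow"))
```

On a real dataset you would also hold out a test split and measure precision and recall, since a filter that flags good mail as spam is costly.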

2️⃣ Project: Music Recommendation

✔️ Million Song Dataset

✔️ Metadata for a million songs

https://t.co/QgdSdIYnVV

Solution 👇🧵

2️⃣ Solution: Music Recommendation

✔️ Using Tableau

✔️ Collaborative-filtering engine

✔️ Similar to YouTube
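A rough sketch of what a collaborative-filtering engine does, assuming user-based filtering with cosine similarity (the thread does not say which variant the solution uses). The play counts, user names, and the `recommend` helper are made up for illustration and are not taken from the Million Song Dataset.

```python
import math

# Hypothetical play counts: user -> {song: number of plays}.
PLAYS = {
    "ana": {"song_a": 5, "song_b": 3, "song_c": 1},
    "ben": {"song_a": 4, "song_b": 4},
    "cat": {"song_c": 5, "song_d": 4},
    "dan": {"song_a": 1, "song_c": 4, "song_d": 5},
}


def cosine(u, v):
    """Cosine similarity between two sparse play-count vectors."""
    shared = set(u) & set(v)
    dot = sum(u[s] * v[s] for s in shared)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(user, plays, top_n=2):
    """Rank songs the user has not played by similarity-weighted plays."""
    scores = {}
    for other, other_plays in plays.items():
        if other == user:
            continue
        similarity = cosine(plays[user], other_plays)
        for song, count in other_plays.items():
            if song not in plays[user]:
                scores[song] = scores.get(song, 0.0) + similarity * count
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ben", PLAYS))
print(recommend("cat", PLAYS))
```

Users with similar listening histories get more weight, so a song played heavily by a like-minded listener ranks above one played by someone with no overlap.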

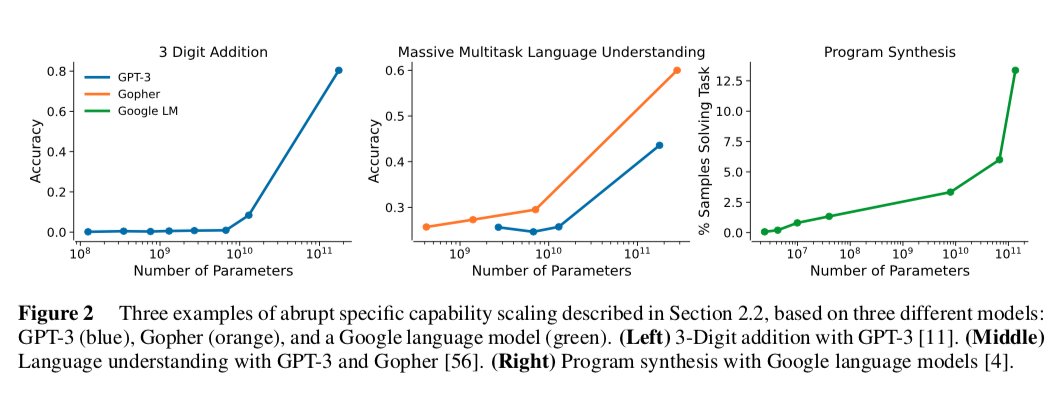

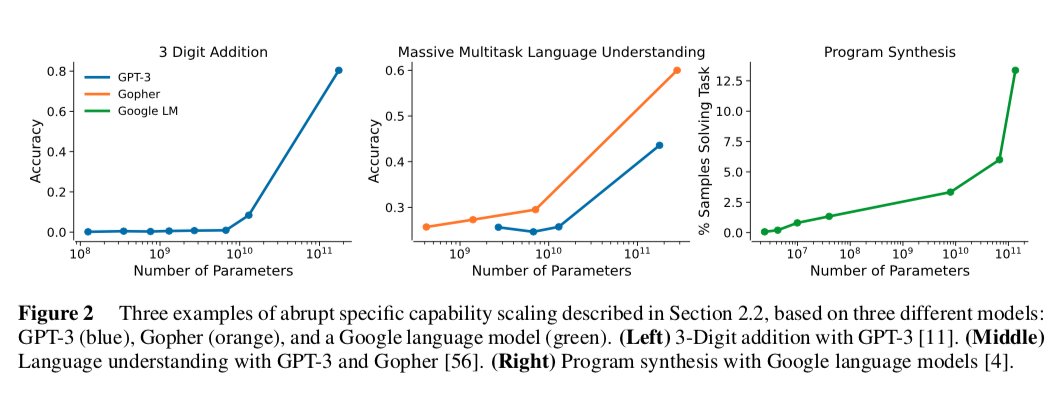

Thread on @AnthropicAI's cool new paper on how large models are both predictable (scaling laws) and surprising (capability jumps).

1. That there’s a capability jump in 3-digit addition for GPT-3 (left) is unsurprising. It's a good challenge to better predict when such a jump will occur.

2. The MMLU capability jump (center) is very different b/c it’s many diverse knowledge questions with no simple algorithm like addition.

This jump is surprising and I’d like to understand better why it happens at all.

3. Program Synthesis jump (right) feels like it should be in between 1 and 2. Less diversity than 2 and we can also imagine models grokking certain concepts in programming leading to a jump.

I’d love to see more work on this topic of predictability and surprise and how they relate to forecasting alignment/risk.

Related work:

1. @gwern's list of capability jumps and classic article on

2. @JacobSteinhardt's insightful blog series. https://t.co/0ckLgOgBiV

3. Lukas Finnveden's post on GPT-n extrapolation /scaling on different task

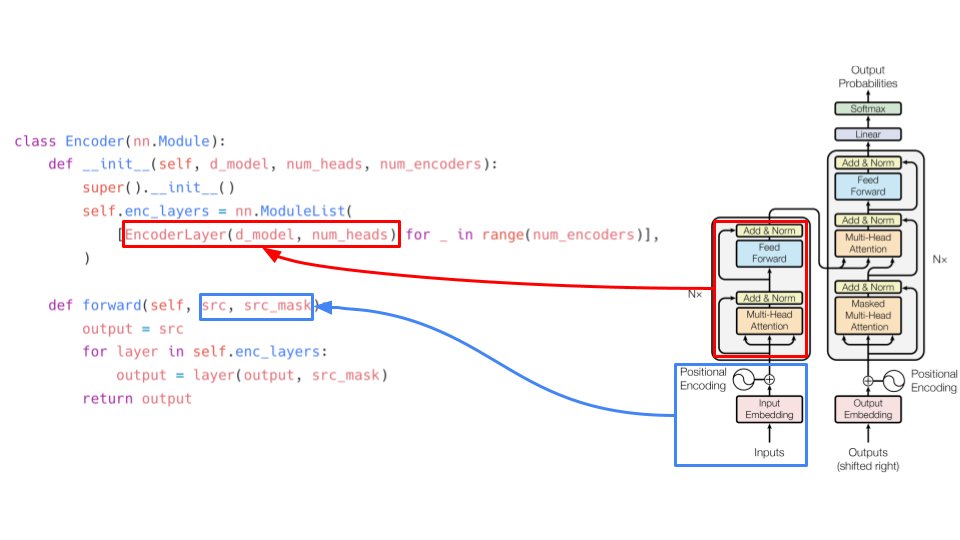

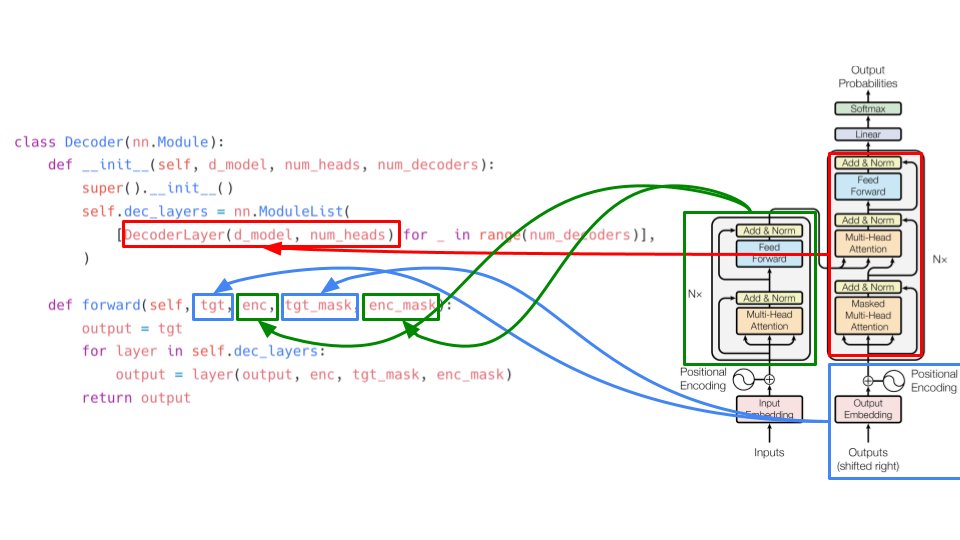

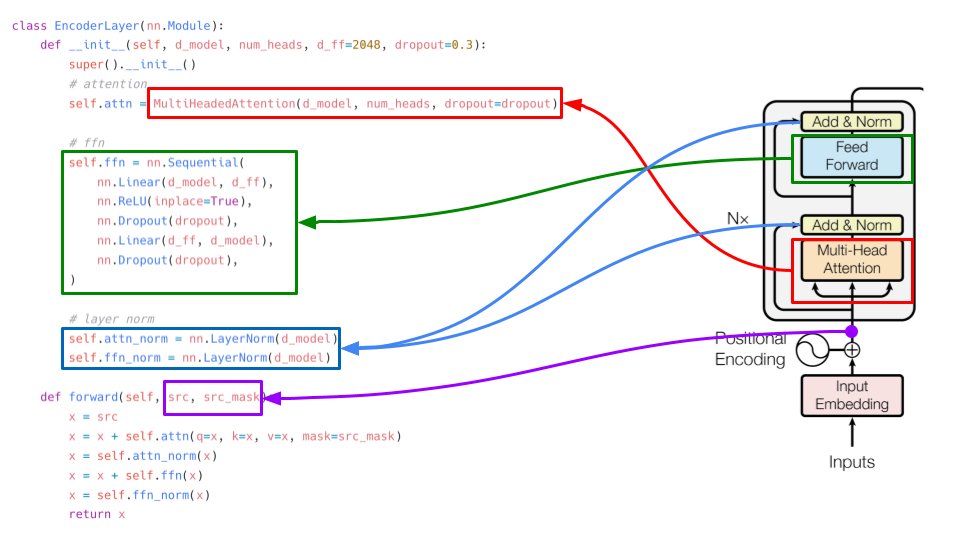

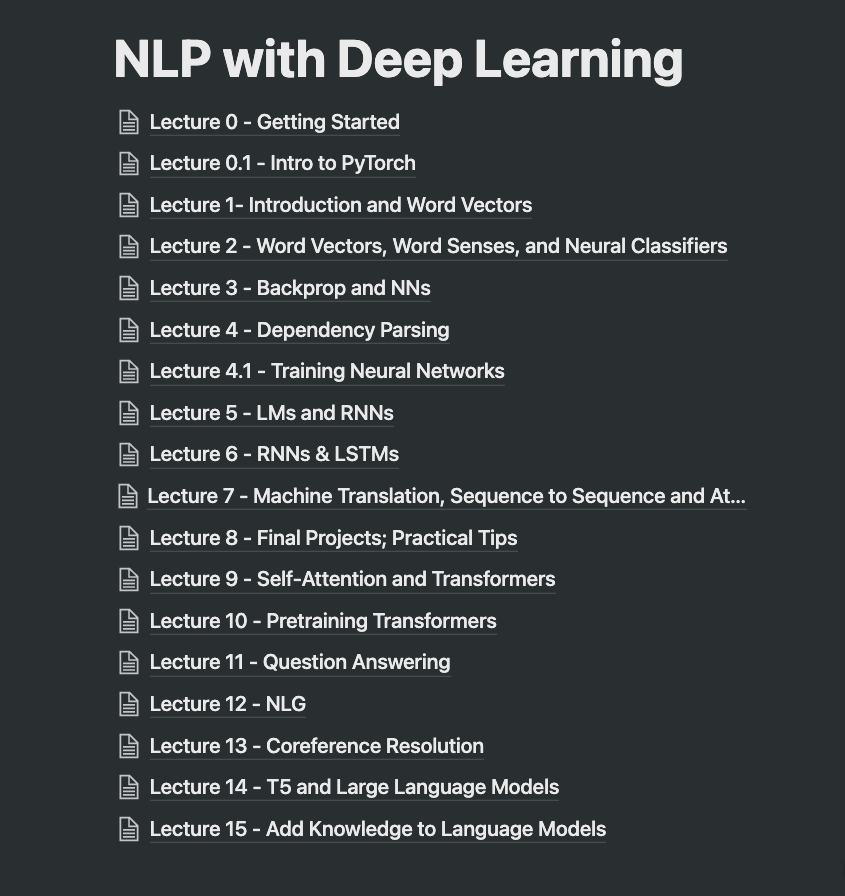

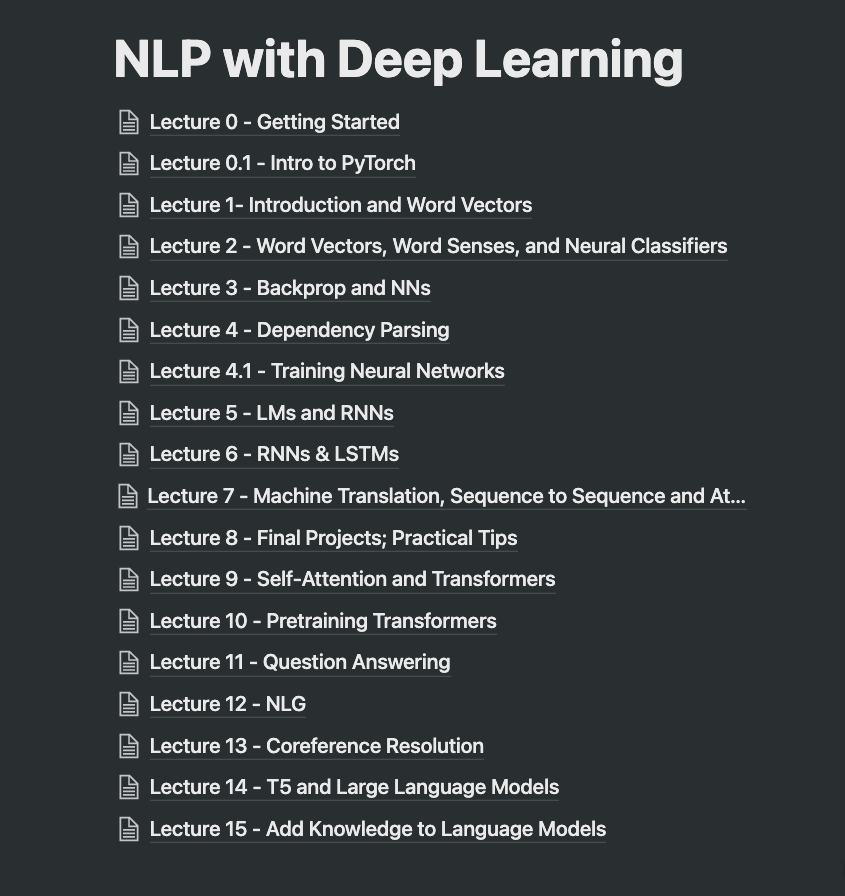

The past month I've been writing detailed notes for the first 15 lectures of Stanford's NLP with Deep Learning. Notes contain code, equations, practical tips, references, etc.

As I tidy the notes, I need to figure out how to best publish them. Here are the topics covered so far:

I know there are a lot of you interested in these from what I gathered 1 month ago. I want to make sure they are high quality before publishing, so I will spend some time working on that. Stay

Below is the course I've been auditing. My advice is to take it slow; there are some advanced concepts in the lectures. It took me 1 month (~3 hrs a day) to take rough notes for the first 15 lectures. Note that this is one semester of

I'm super excited about this project because my plan is to make the content more accessible so that a beginner can consume it more easily. It's tiring, but I will keep at it because I know many of you will enjoy the notes and find them useful. More announcements coming soon!

NLP is evolving so fast, so one idea with these notes is to create a live document that could be easily maintained by the community. Something like what we did before with NLP Overview: https://t.co/Y8Z1Svjn24

Let me know if you have any thoughts on this?

I've been writing notes for the latest Deep Learning for NLP course by Stanford.

— elvis (@omarsar0) January 14, 2022

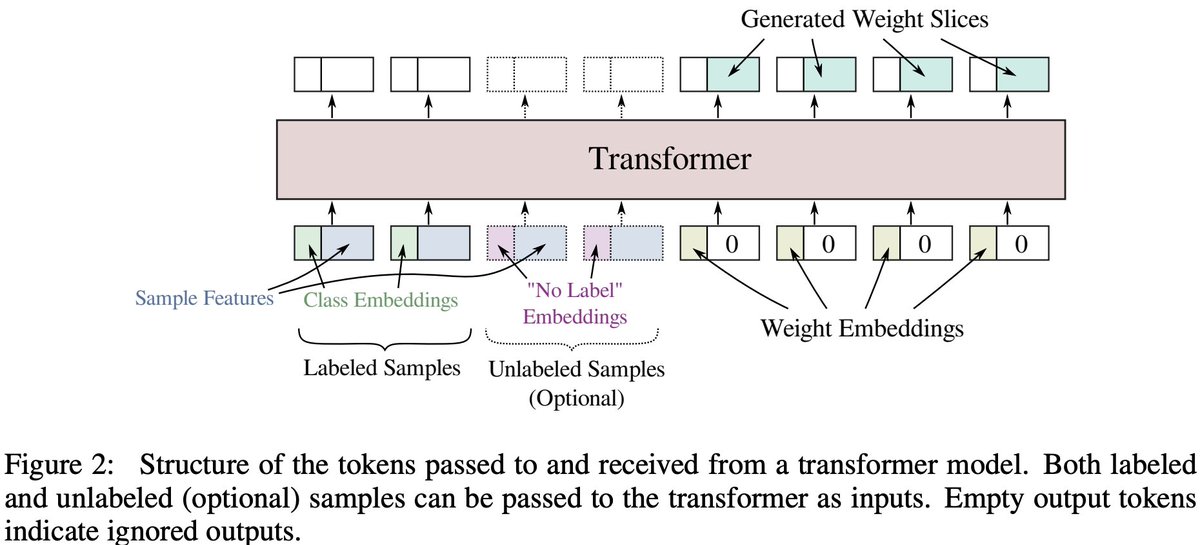

For fun, I also started to add my own code snippets into the notes. I think this is a more efficient way to study: theory + code.

Plan to share these notes soon. Stay tuned! pic.twitter.com/hWzZDORbl6

I recently switched what I spend the majority of my professional life doing: history -> software engineering. I'm currently working as an ML Engineer @zenml_io and really enjoying this new world of #MLOps, filled as it is with challenges and opportunities.

I wanted to get some context for the wider work of a data scientist to help me appreciate the problem we are trying to address @zenml_io, so looked around for a juicy machine learning problem to work on as a longer project.

I was also encouraged by @jeremyphoward's advice to "build one project and make it great" (https://t.co/Doo88EUhkN). This approach seems like it has really paid off for those who've studied the @fastdotai course and I wanted to really go deep on something myself.

Following some previous success working with @adyantalamadhya and another mentor via @SharpestMindsAI on a previous project, I settled on computer vision and was lucky to find @ai_fast_track to mentor me through the work. (We meet a couple of times per week).

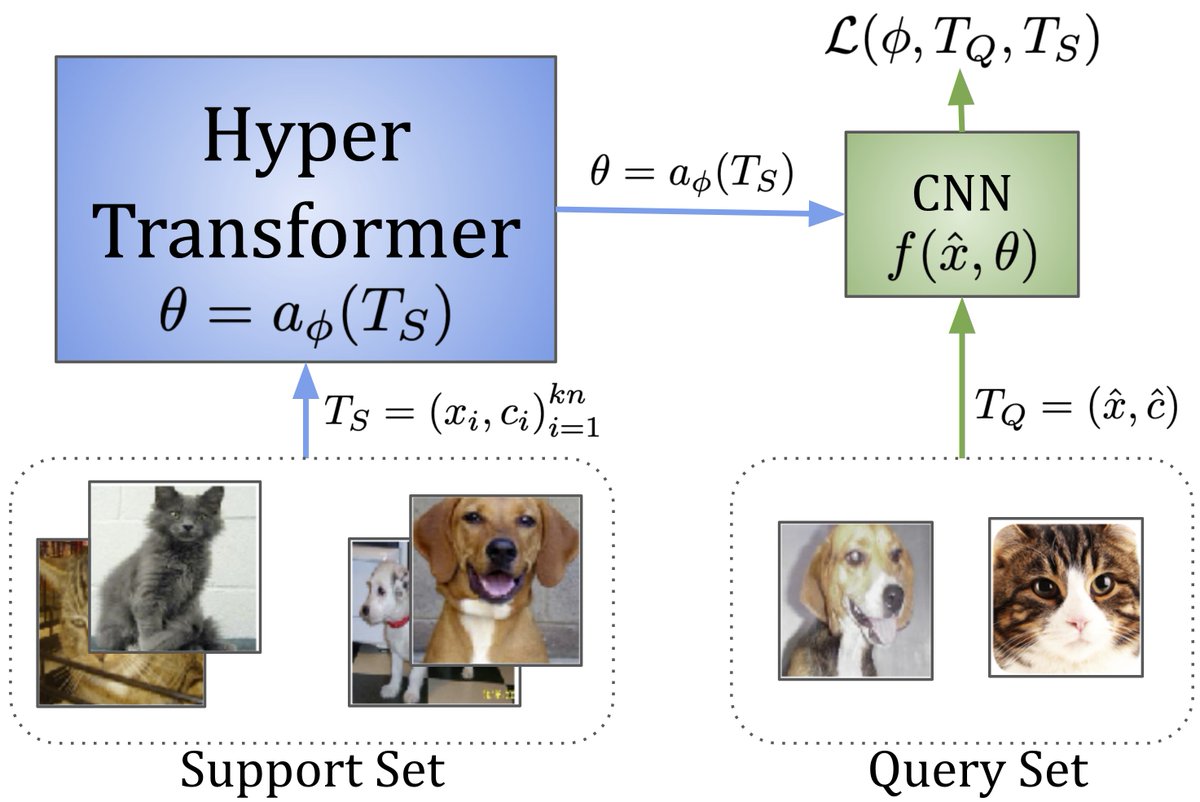

In the last 6 weeks, I've made what feels like good progress on the problem. This image offers an overview of the pieces I've been working on, to the point where the 'solution' to my original problem feels on the verge of being practically within reach.

Want to learn Django?

Check out these 8 free resources.

see 👇🧵

Get started with Django

Covers

- Installing and creating first project

- Working with templates

- Authentication frameworks

...and much more

More resources

Django Admin Cookbook

How to do things with Django admin

- Create two admin sites

- Bulk and custom actions

- Working with permissions

...and much more

https://t.co/NM0Nby5NwB

More 👇🧵

Django ORM cookbook

How to do things using Django ORM

- How to do OR/AND queries in ORM

- CRUD with ORM

- Database modelling

...and much more

https://t.co/tYjdbiYpWU

More 👇🧵

Building APIs with Django and Django Rest Framework

Learn about

- Simple API with pure Django

- Serializing and Deserializing data

- Access control

...and much more

https://t.co/gQTDxiG3wX

More 👇🧵

Early last year, I wanted to learn about Machine Learning Operations (MLOps).

MLOps refers to the whole set of processes involved in building and deploying machine learning models reliably.

A thread on the importance of MLOps and resources that I used 🧵

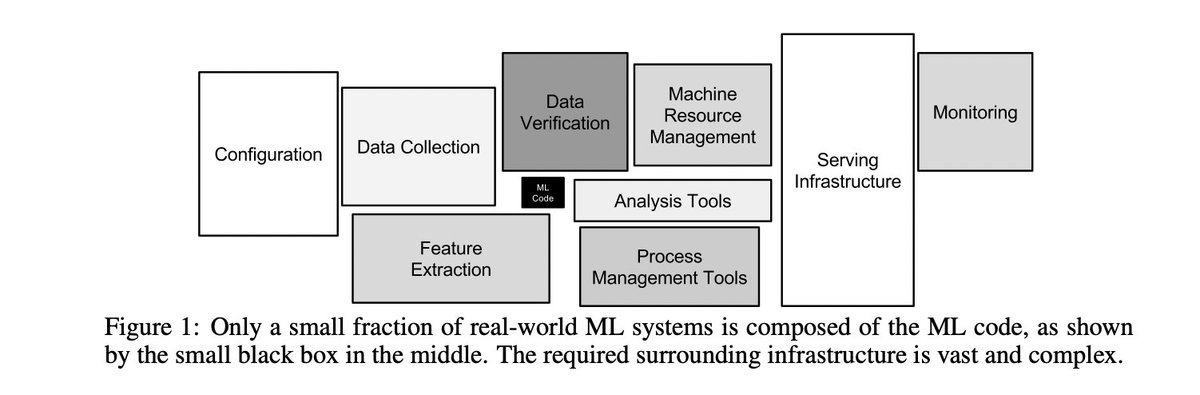

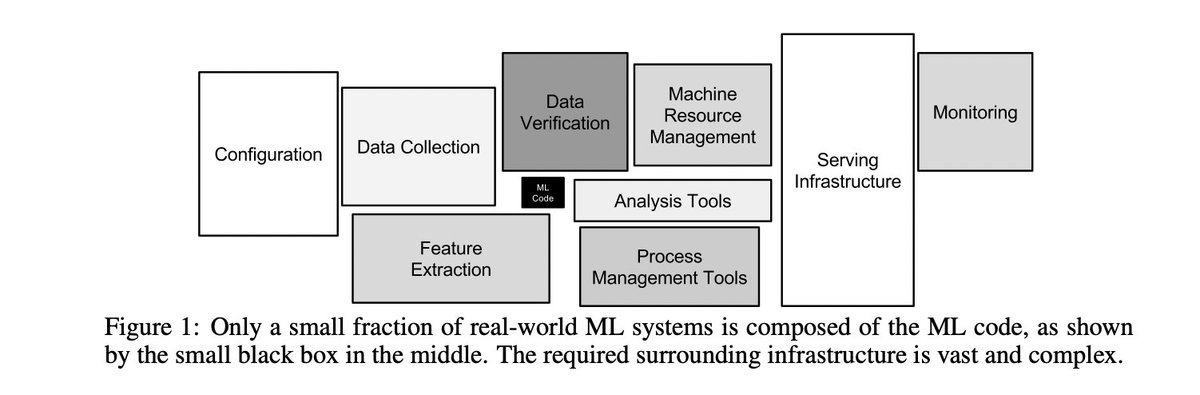

As you may have heard, models are a tiny part of any typical ML-powered application.

Nothing stresses that as well as this picture:

Source: Hidden Technical Debt in Machine Learning Systems, https://t.co/JDyAr1s3kc

There are lots of critical processes that are involved in MLOps such as:

- Data processes: collection, labeling, exploration, preprocessing

- Modeling processes: building, training, evaluation, testing

- Production processes: serving, monitoring, and maintaining models

MLOps is a new topic for almost anyone. Maintaining models for a prolonged period of time is difficult.

Models are very prone to change. They drift over time. The world (that sources the data) changes, and so the data changes too.
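One simple way to watch for this kind of drift is to compare a feature's live values against the values seen at training time. The sketch below is only an illustration under stated assumptions: the feature (ages), the numbers, the 0.5 threshold, and the `mean_shift` and `has_drifted` helpers are all hypothetical, and production monitoring usually relies on richer tests than a shift in the mean.

```python
import statistics


def mean_shift(reference, live):
    """Shift of the live mean from the reference mean, in reference std units."""
    ref_mean = statistics.mean(reference)
    ref_std = statistics.stdev(reference)
    if ref_std == 0:
        raise ValueError("reference data has no spread")
    return abs(statistics.mean(live) - ref_mean) / ref_std


def has_drifted(reference, live, threshold=0.5):
    """Flag drift when the standardized mean shift exceeds the threshold."""
    return mean_shift(reference, live) > threshold


# Hypothetical feature values: customer age at training time vs. in production.
TRAINING_AGES = [31, 35, 29, 40, 33, 37, 30, 36]
LIVE_SAME = [32, 34, 30, 38, 35, 33]
LIVE_SHIFTED = [48, 52, 45, 50, 47, 55]

print(has_drifted(TRAINING_AGES, LIVE_SAME))
print(has_drifted(TRAINING_AGES, LIVE_SHIFTED))
```

A check like this would typically run on a schedule for each input feature, with a flagged feature prompting a closer look or a retraining run.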

MLOps is a huge topic. All I wanted was to have a reasonable understanding of it.

Here are 3 resources that I used:

- Machine Learning Engineering book by @burkov

- MLOps Specialization by @DeepLearningAI_

- Introducing MLOps book (O'Reilly)
