Check out this thread for short reviews of some interesting Machine Learning and Computer Vision papers. I explain the basic ideas and main takeaways of each paper in a Twitter thread.

👇 I'm adding new reviews all the time! 👇

AlexNet - the paper that started the deep learning revolution in Computer Vision!

It's finally time for some paper review! 📜🔍🧐

— Vladimir Haltakov (@haltakov) September 28, 2020

I promised the other day to start posting threads with summaries of papers that had a big impact on the field of ML and CV.

Here is the first one - the AlexNet paper! pic.twitter.com/QNLPIMZSIa

DenseNet - reducing the size and complexity of CNNs by adding dense connections between layers.

ML paper review time - DenseNet! 🕸️

— Vladimir Haltakov (@haltakov) October 15, 2020

This paper won the Best Paper Award at the 2017 Conference on Computer Vision and Pattern Recognition (CVPR) - the best conference for computer vision problems.

It introduces a new CNN architecture where the layers are densely connected. pic.twitter.com/DuHytaoXia
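A minimal sketch of what "densely connected" means, assuming the usual DenseNet formulation in which each layer takes the concatenation of all earlier feature maps as input and adds a fixed number of new channels (the growth rate). The name `dense_block` and the random linear map standing in for the real BN-ReLU-Conv layer are illustrative assumptions, not the paper's code.

```python
import numpy as np

def dense_block(x, num_layers, growth_rate, rng):
    """Toy dense block. x has shape (channels, positions)."""
    features = [x]  # every feature map produced so far, including the input
    for _ in range(num_layers):
        # Each layer sees ALL previous feature maps, stacked along channels.
        layer_input = np.concatenate(features, axis=0)
        # Stand-in for BN-ReLU-Conv: a random linear map followed by ReLU.
        weights = rng.standard_normal((growth_rate, layer_input.shape[0])) * 0.1
        new_features = np.maximum(weights @ layer_input, 0.0)
        features.append(new_features)
    # The block output is the concatenation of the input and all new features.
    return np.concatenate(features, axis=0)

rng = np.random.default_rng(0)
x = np.ones((16, 10))  # 16 input channels, 10 spatial positions
out = dense_block(x, num_layers=4, growth_rate=12, rng=rng)
print(out.shape)  # channels grow by growth_rate per layer: 16 + 4 * 12
```

Because every layer only adds `growth_rate` channels and reuses everything before it, individual layers can stay narrow, which is where the savings in size come from.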

Playing for data - generating synthetic ground truth (GT) from a video game (GTA V) and using it to improve semantic segmentation models.

Time for another ML paper review - generating synthetic ground truth data from video games! 🎮

— Vladimir Haltakov (@haltakov) October 5, 2020

I love this paper because it pushes the boundaries of creating realistic synthetic ground truth data and shows that you can train on it to improve your model.

Details 👇 pic.twitter.com/fBgORYG8Lz

Transformers for image recognition - a new paper with the potential to replace convolutions with a transformer.

Another paper review, but a little different this time... 🤷‍♂️

— Vladimir Haltakov (@haltakov) October 5, 2020

The paper is not published yet, but is submitted for review at ICLR 2021. It is getting a lot of attention from the CV/ML community, though, and many speculate that it is the end of CNNs... 👇 https://t.co/bh6wUxYfxu pic.twitter.com/dZGBYB8A5U

In which we replace Transformers' self-attention with FFT and it works nearly as well but faster/cheaper.

https://t.co/GiUvHkB3SK

By James Lee-Thorp, Joshua Ainslie, @santiontanon and myself, sorta
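A rough sketch of the idea, assuming the mixing step is the one usually described for this approach: a discrete Fourier transform along the hidden dimension and another along the sequence dimension, keeping only the real part. The function name `fourier_mixing` is illustrative, and the rest of the encoder block (residuals, layer norm, feed-forward) is omitted.

```python
import numpy as np

def fourier_mixing(x):
    """Mix tokens with a parameter-free 2D FFT instead of self-attention.

    x: real array of shape (seq_len, hidden_dim).
    Returns a real array of the same shape.
    """
    # fft2 applies a 1D FFT along each of the last two axes
    # (sequence and hidden); only the real part is kept.
    return np.fft.fft2(x).real

x = np.ones((4, 8))        # toy "sequence" of 4 tokens with 8 features each
mixed = fourier_mixing(x)
print(mixed.shape)         # same shape as the input
```

Nothing in this step is learned, which is the point of the question raised in the thread: how much of attention has to be adaptive, and how much can be a fixed, precomputed transform.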

Attention clearly works - but why? What's essential in it and what's secondary? What needs to be adaptive/learned and what can be precomputed?

The paper asks these questions, with some surprising insights.

These questions and insights echo other very recent findings like @ytay017's Pretrained CNNs for NLP (https://t.co/k0jOuYMxzz) and MLP-Mixer for Vision from @neilhoulsby and co. (Like them, we also found combos of MLP to be promising.)

↓

📘 Introducing MLOps

An excellent primer to MLOps and how to scale machine learning in the enterprise.

https://t.co/GCnbZZaQEI

🎓 Machine Learning Engineering for Production (MLOps) Specialization

A new specialization by https://t.co/mEjqoGrnTW on machine learning engineering for production (MLOps).

https://t.co/MAaiRlRRE7

⚙️ MLOps Tooling Landscape

A great blog post by Chip Huyen summarizing all the latest technologies/tools used in MLOps.

https://t.co/hsDH8DVloH

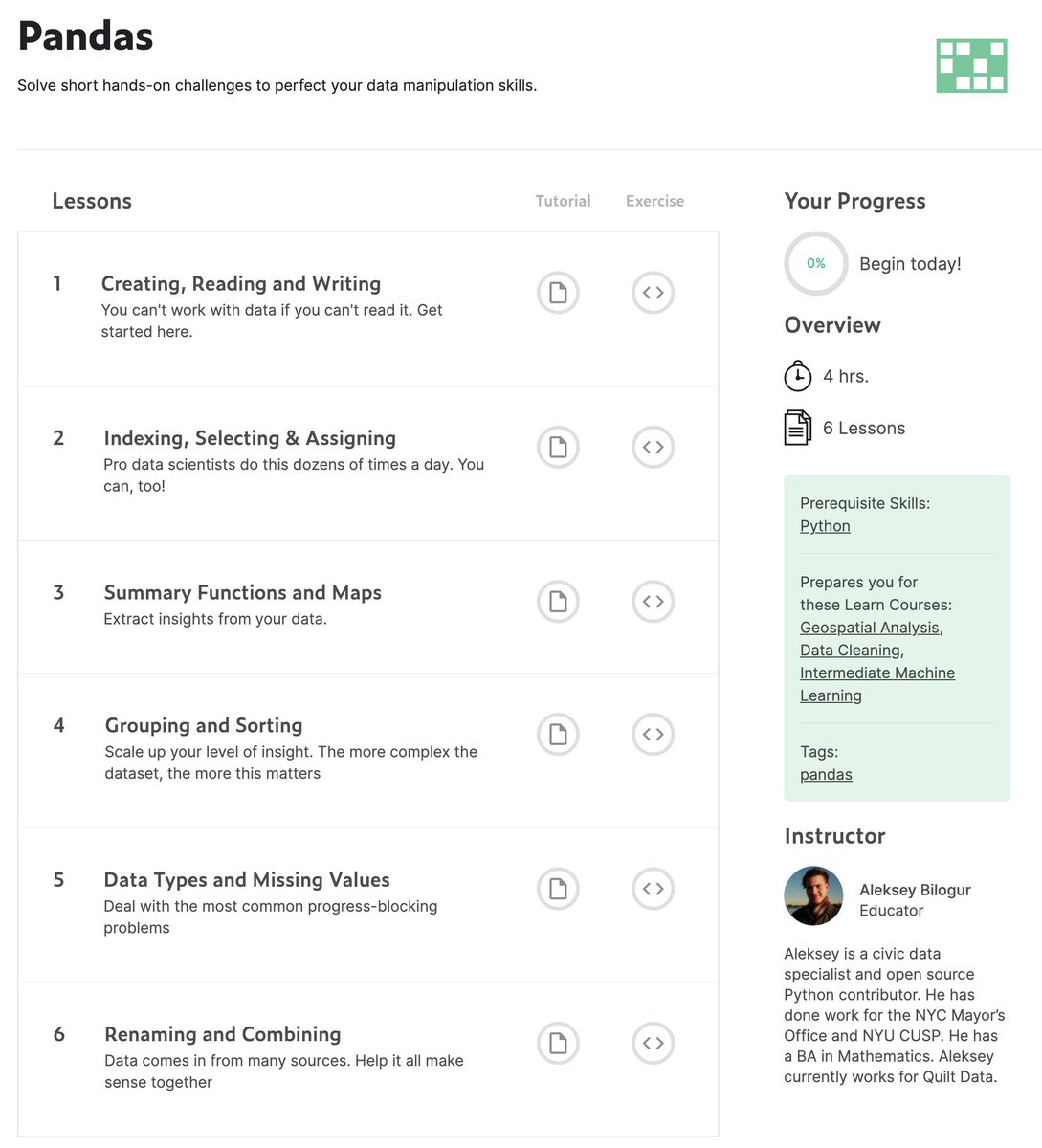

🎓 MLOps Course by Goku Mohandas

A series of lessons teaching how to apply machine learning to build production-grade products.

https://t.co/RrV3GNNsLW

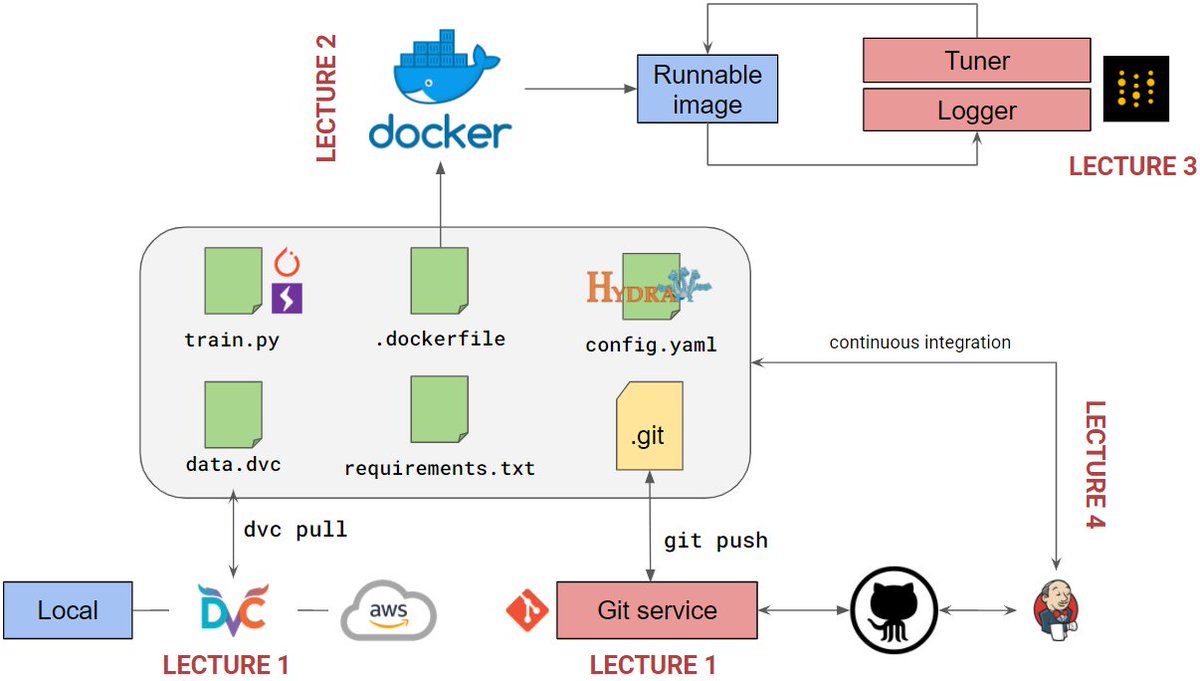

Lectures 3 and 4 are out!

With code versioning out of the way, it is time to look at data versioning (@DVCorg) and environment isolation (@Docker).

All information in a small thread. 👇 /n

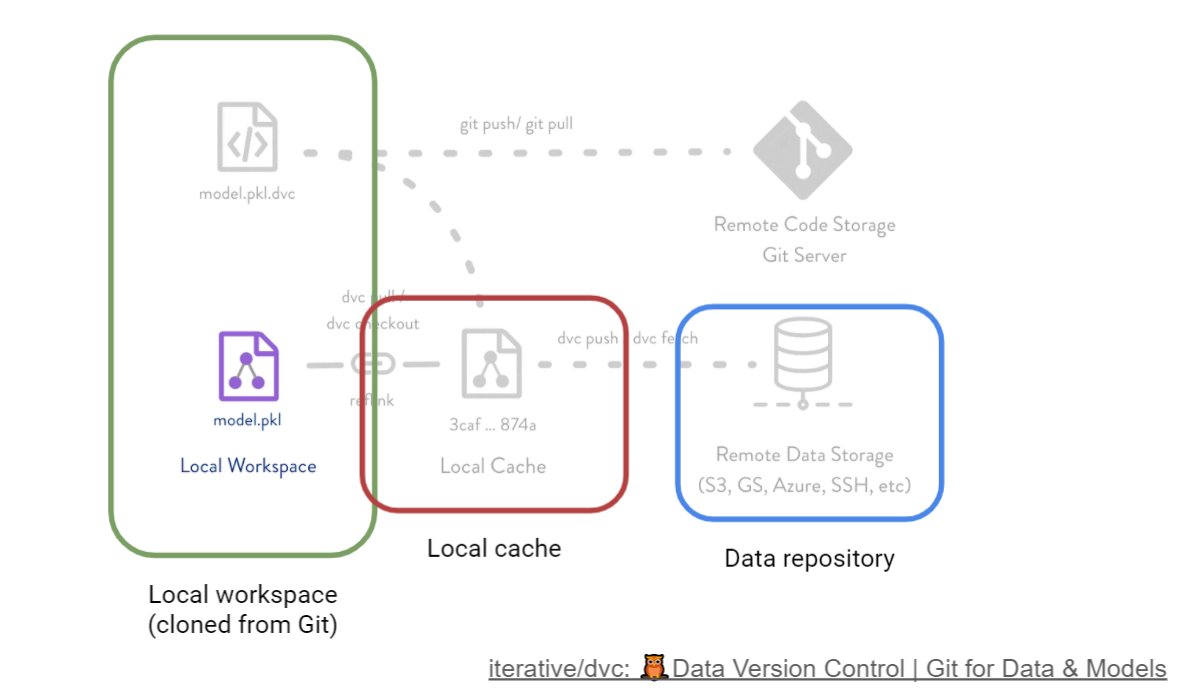

If you know Git, you (almost) know @DVCorg!

A fantastic tool to secure your data in a number of remotes, or to create "data repositories" from which to immediately get folders and artifacts.

My intro to DVC: https://t.co/2m3cXGAPN6

For the course, I created a simple exercise tasking you with initializing DVC on the repository, and syncing the data locally and remotely.

To simulate an S3-like interface, we use a small https://t.co/91bFj7KSPG server and boto3.

Code: https://t.co/KDSX80aqJs

Next up, it is time to "dockerize" your environment!

Docker has become an almost de facto standard, and knowing it is practically indispensable today.

A very quick introduction, glossing over a number of details: https://t.co/XSrUZNhd3g

In the corresponding exercise, you will learn about creating a working environment in Docker, packaging the entire training loop, and pushing/pulling an image from the Hub.

Code is here: