Author: Owain Evans

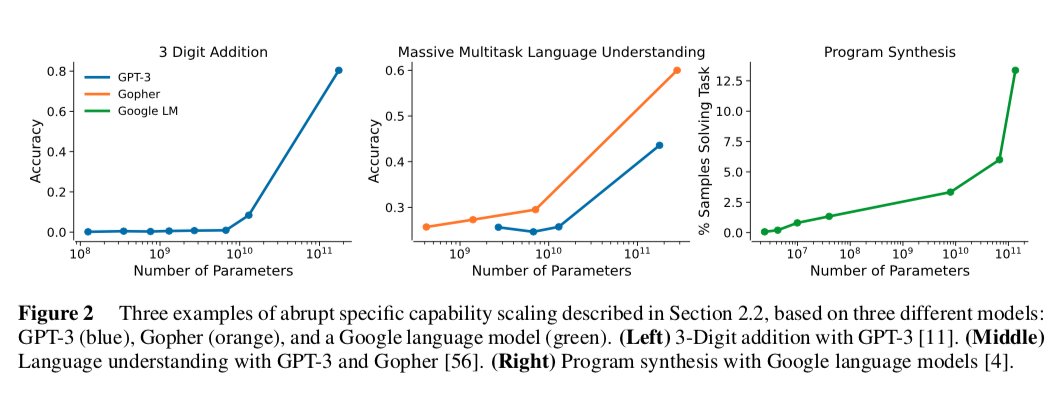

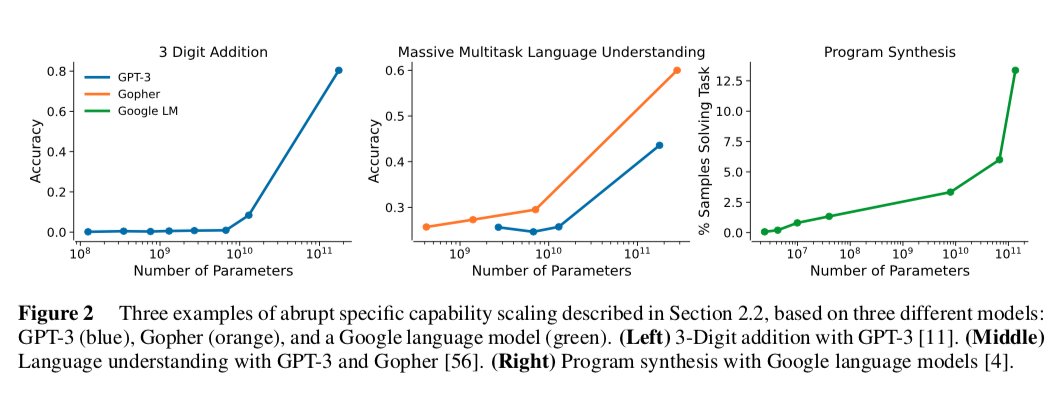

Thread on @AnthropicAI's cool new paper on how large models are both predictable (scaling laws) and surprising (capability jumps).

1. That there’s a capability jump in 3-digit addition for GPT-3 (left) is unsurprising. It's a good challenge to predict better when such a jump will occur.

2. The MMLU capability jump (center) is very different, because MMLU consists of many diverse knowledge questions with no simple underlying algorithm like addition.

This jump is surprising and I’d like to understand better why it happens at all.

3. The Program Synthesis jump (right) feels like it should be in between 1 and 2: there's less diversity than in 2, and we can also imagine models grokking certain programming concepts, leading to a jump.

I’d love to see more work on this topic of predictability and surprise and how they relate to forecasting alignment/risk.

Related work:

1. @gwern's list of capability jumps and classic article on

2. @JacobSteinhardt's insightful blog series. https://t.co/0ckLgOgBiV

3. Lukas Finnveden's post on GPT-n extrapolation/scaling on different tasks
