Last up in Privacy Tech for #enigma2021, @xchatty speaking about "IMPLEMENTING DIFFERENTIAL PRIVACY FOR THE 2020 CENSUS"

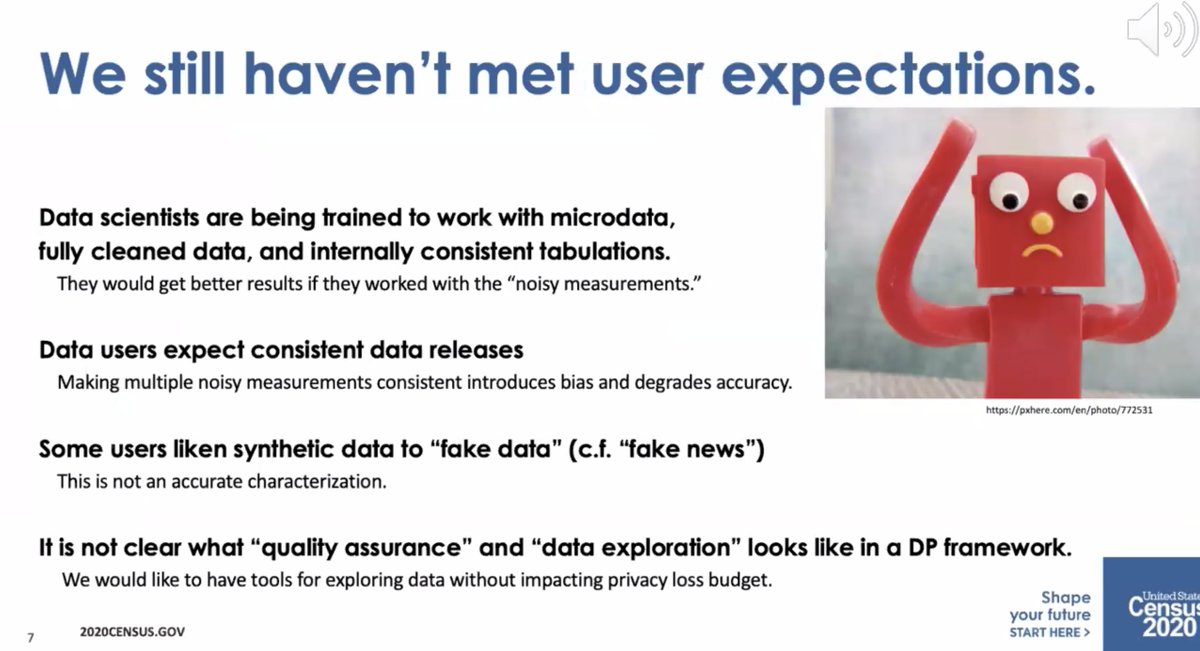

* Data users expect consistent data releases

* Some people call synthetic data "fake data", like "fake news"

* It's not clear what "quality assurance" and "data exploration" mean in a DP framework

* The Census Bureau is required by the Constitution to collect the data

* but required by law to protect respondents' privacy

* differential privacy is open (the method can be published), so we can talk openly about the privacy-loss/accuracy tradeoff

* swapping (the previous disclosure-avoidance method) relied on assumed limitations of attackers (e.g., limited computational power)
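The privacy-loss/accuracy tradeoff can be made concrete with the textbook Laplace mechanism. This is a minimal sketch for a single count, assuming sensitivity 1 (adding or removing one person changes a count by at most one); the function names are hypothetical and this is not the Census Bureau's production code. Because the guarantee doesn't depend on keeping the mechanism secret, the privacy-loss parameter epsilon and the noise scale can be published, which is the "openness" contrasted with swapping above.

```python
import random
import statistics


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(true_count: int, epsilon: float, rng: random.Random,
                sensitivity: float = 1.0) -> float:
    """Hypothetical Laplace-mechanism release of one count.

    Noise with scale sensitivity / epsilon gives epsilon-differential privacy.
    """
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(true_count: int, epsilon: float, trials: int = 20000,
                   seed: int = 0) -> float:
    """Estimate the expected |error| of noisy_count by simulation."""
    rng = random.Random(seed)
    return statistics.fmean(
        abs(noisy_count(true_count, epsilon, rng) - true_count)
        for _ in range(trials)
    )


if __name__ == "__main__":
    # Smaller epsilon means less privacy loss but larger expected error.
    for eps in (0.1, 1.0, 10.0):
        print(f"epsilon={eps:>5}: mean |error| ~ {mean_abs_error(100, eps):.2f}")
```

For Laplace noise the expected absolute error equals the scale, so with sensitivity 1 the simulated error should come out near 1/epsilon: cutting privacy loss by 10x costs roughly 10x in accuracy.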

Treating "privacy" as relative (a matter of degree) changes its meaning; that requires a lot of explanation and overcoming organizational barriers.

* different groups at the Census Bureau thought "privacy" meant different things

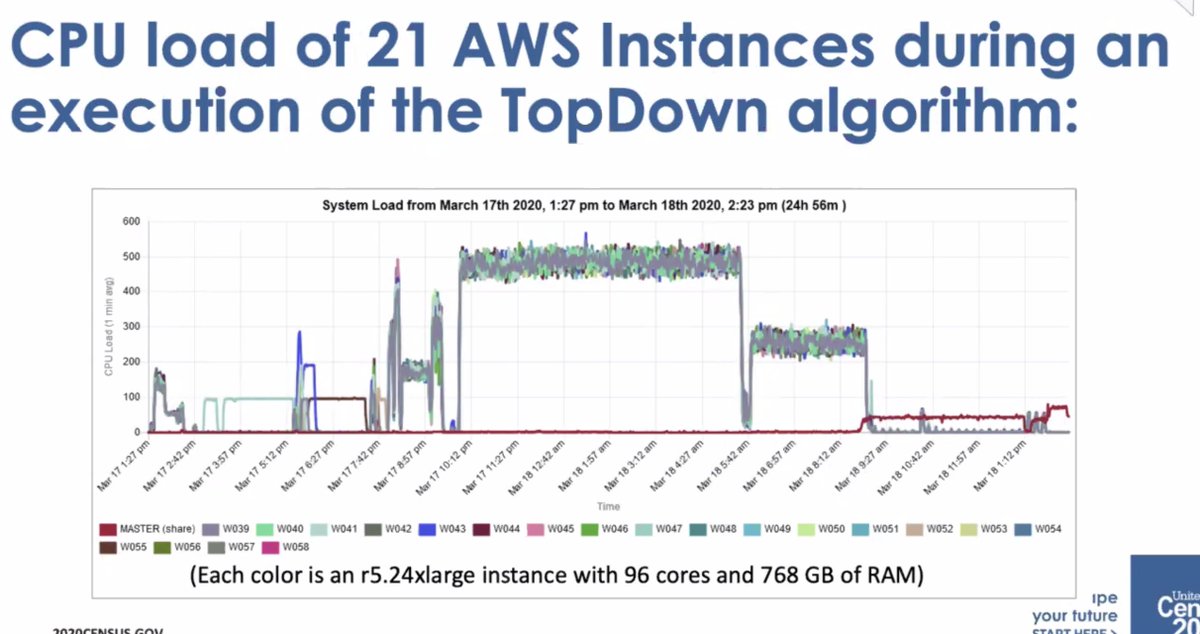

* before, states were processed as they came in; differential privacy requires computing on everything at once

* required a lot more computing power

* initial implementation was by Dan Kifer, who took a sabbatical

* expanded the team with Simson and others

* 2018 End-to-End Census Test
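One way to see why states can no longer be finalized as they arrive: noisy counts are measured at several geographic levels, and making the published tables consistent couples them. The sketch below is a hypothetical, heavily simplified illustration (one national count, a few state counts, equal noise variance, least squares subject to the states summing to the nation); the production TopDown Algorithm is far more elaborate.

```python
from __future__ import annotations


def reconcile(national_noisy: float,
              state_noisy: list[float]) -> tuple[float, list[float]]:
    """Hypothetical consistency step for one parent and its children.

    Minimizes (x_n - national_noisy)**2 + sum((x_i - state_noisy[i])**2)
    subject to x_n == sum(x_i). With equal variances the closed form spreads
    the discrepancy d = national_noisy - sum(state_noisy) evenly over all
    n + 1 measurements.
    """
    n = len(state_noisy)
    d = national_noisy - sum(state_noisy)
    states = [s + d / (n + 1) for s in state_noisy]
    national = sum(states)  # equals national_noisy - d / (n + 1)
    return national, states


if __name__ == "__main__":
    print(reconcile(100.0, [30.0, 30.0, 30.0]))
    # Changing only the third state's measurement moves every state's estimate,
    # so no state can be finalized before all the others are measured.
    print(reconcile(100.0, [30.0, 30.0, 34.0]))
```

In the first call each state is adjusted to 32.5 and the nation to 97.5; in the second, the first state's estimate drops to 31.5 even though its own measurement didn't change.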

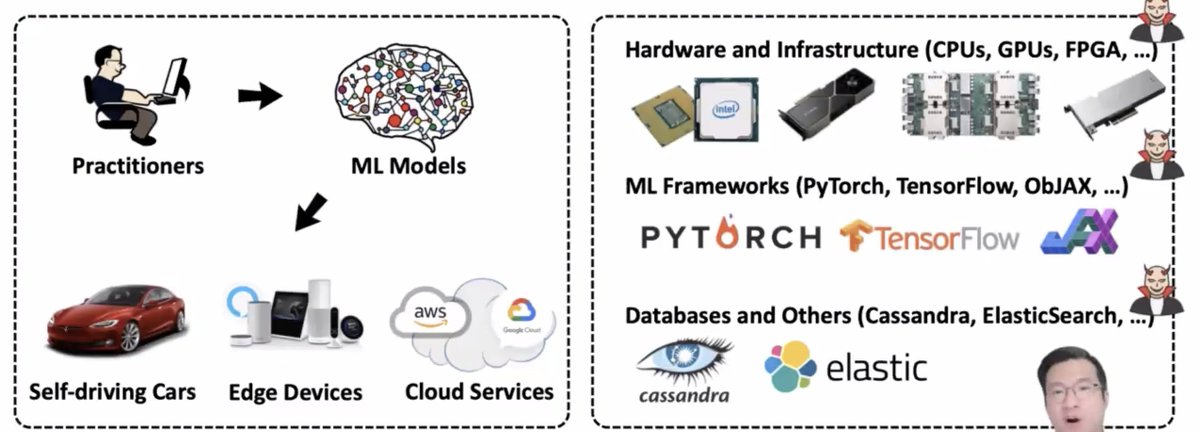

* then got to move to AWS Elastic Compute... but the monitoring wasn't good enough, so they had to create their own dashboard to track execution

* it wasn't a small amount of compute

* ... it wasn't well-received by data users, who thought there was too much error

If you avoid that, you might add bias to the data. How do you avoid the bias? Let some data users access the measurement files. [I don't follow]
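Assuming the bias meant here is the usual post-processing kind, a well-known example is that published counts can't be negative, so negative noisy counts get clamped to zero, which pushes small counts upward on average. This is a hypothetical illustration with Laplace noise and simple clamping, not the Census Bureau's method; it suggests why the raw noisy measurements (unbiased under zero-mean additive noise) could matter to some data users.

```python
import random
import statistics


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def mean_published(true_count: int, epsilon: float, clamp: bool,
                   trials: int = 50000, seed: int = 1) -> float:
    """Average released value over many simulated releases of one count.

    clamp=True mimics a hypothetical post-processing step that forces
    released counts to be non-negative.
    """
    rng = random.Random(seed)
    values = []
    for _ in range(trials):
        noisy = true_count + laplace_noise(1.0 / epsilon, rng)
        values.append(max(0.0, noisy) if clamp else noisy)
    return statistics.fmean(values)


if __name__ == "__main__":
    # For a true count of 0, the raw noisy value averages about 0, while the
    # clamped value averages about scale / 2 (0.5 when epsilon = 1).
    print("raw:    ", round(mean_published(0, 1.0, clamp=False), 3))
    print("clamped:", round(mean_published(0, 1.0, clamp=True), 3))
```

The clamped average sits near half the noise scale because half the noisy values are negative and get replaced by zero, while the positive half averages the full scale.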