Just gonna leave this here. When we released the Alternative Influence Network report (@beccalew is the author), many were critical of the humble recommendation that social media companies should review accounts as they gained popularity.

Too busy trying to spot a bot maybe? Too worried about declining data stockpiles?

It’s bumming me out.

The problem is the design of social media as a content delivery system.

The only way to talk society off the ledge is to work on smaller scales.

@mapc @blnaveen @praymurray @anjakovacs @gonzatto @hugocristo @souzaeduardo @mamaazure @fredvanamstel

— Raghav Agrawal (@impactology) January 18, 2021

More from Tech

It's all in French, but if you're up for it you can read:

• Their blog post (lacks the most interesting details): https://t.co/PHkDcOT1hy

• Their high-level legal decision: https://t.co/hwpiEvjodt

• The full notification: https://t.co/QQB7rfynha

I've read it so you needn't!

Vectaury was collecting geolocation data in order to create profiles (eg. people who often go to this or that type of shop) so as to power ad targeting. They operate through embedded SDKs and ad bidding, making them invisible to users.

The @CNIL notes that profiling based on geolocation presents particular risks since it reveals people's movements and habits. Because it is risky, the processing requires consent, and this will be the heart of their assessment.

Interesting point: they justify the decision in part because of how many people COULD be targeted in this way (rather than how many have — though they note that too). Because it's on a phone, and many have phones, it is considered large-scale processing no matter what.

Flat Earth conference attendees explain how they have been brainwashed by YouTube and Infowars https://t.co/gqZwGXPOoc

— Raw Story (@RawStory) November 18, 2018

This spring at SxSW, @SusanWojcicki promised "Wikipedia snippets" on debated videos. But they didn't put them on flat earth videos, and instead @YouTube is promoting merchandising such as "NASA lies - Never Trust a Snake". 2/

A few examples of flat earth videos that were promoted by YouTube #today:

https://t.co/TumQiX2tlj 3/

https://t.co/uAORIJ5BYX 4/

https://t.co/yOGZ0pLfHG 5/

Legacy site *downloads* ~630 KB CSS per theme and writing direction.

6,769 rules

9,252 selectors

16.7k declarations

3,370 unique declarations

44 media queries

36 unique colors

50 unique background colors

46 unique font sizes

39 unique z-indices

https://t.co/qyl4Bt1i5x

PWA *incrementally generates* ~30 KB CSS that handles all themes and writing directions.

735 rules

740 selectors

757 declarations

730 unique declarations

0 media queries

11 unique colors

32 unique background colors

15 unique font sizes

7 unique z-indices

https://t.co/w7oNG5KUkJ

The legacy site's CSS is what happens when hundreds of people directly write CSS over many years. Specificity wars, redundancy, a house of cards that can't be fixed. The result is extremely inefficient and error-prone styling that punishes users and developers.

The PWA's CSS is generated on-demand by a JS framework that manages styles and outputs "atomic CSS". The framework can enforce strict constraints and perform optimisations, which is why the CSS is so much smaller and safer. Style conflicts and unbounded CSS growth are avoided.
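To make the "atomic CSS" idea concrete, here is a toy sketch in Python. It is not the framework the thread describes; the class `AtomicStyleSheet` and the generated class names (`a0`, `a1`, ...) are hypothetical. It only illustrates the principle that each unique declaration becomes exactly one single-purpose rule, created the first time it is needed, so the stylesheet grows with the number of unique declarations rather than with the number of components.

```python
from typing import Dict, List


class AtomicStyleSheet:
    """Toy atomic-CSS generator: one rule per unique declaration (illustrative only)."""

    def __init__(self) -> None:
        # Maps a "property:value" declaration to its generated class name.
        self._classes: Dict[str, str] = {}

    def class_names(self, style: Dict[str, str]) -> List[str]:
        """Return atomic class names for a style, creating rules on demand."""
        names = []
        for prop, value in style.items():
            key = f"{prop}:{value}"
            if key not in self._classes:
                # First time this declaration is seen: emit a new single-purpose rule.
                self._classes[key] = f"a{len(self._classes)}"
            names.append(self._classes[key])
        return names

    def css(self) -> str:
        """Serialize every rule generated so far, one declaration per rule."""
        return "\n".join(f".{name}{{{key}}}" for key, name in self._classes.items())


sheet = AtomicStyleSheet()
button = sheet.class_names(
    {"color": "white", "background-color": "blue", "font-size": "14px"}
)
link = sheet.class_names({"color": "white", "font-size": "14px"})
print(button, link)
print(sheet.css())
```

The second component reuses the classes already generated for `color` and `font-size`, so no new rules are added. Because every rule is a single class with a single declaration, all rules have the same specificity, which is one way such a system avoids the specificity wars mentioned above.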

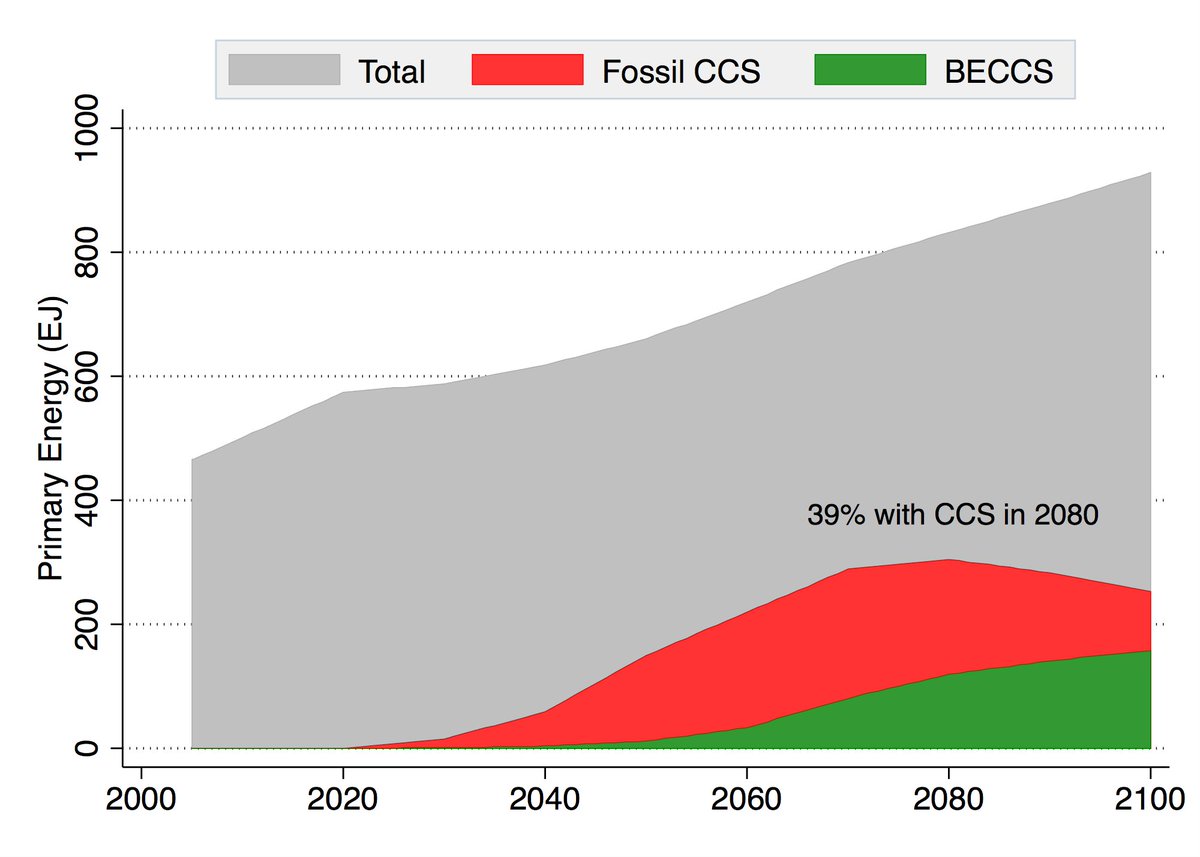

Energy system models love NETs, particularly for very rapid mitigation scenarios like 1.5C (where the alternative is zero global emissions by 2040)! More problematically, they also like tons of NETs in 2C scenarios where NETs are less essential. https://t.co/M3ACyD4cv7 2/10

There is a lot of confusion about carbon budgets and how quickly emissions need to fall to zero to meet various warming targets. To cut through some of this morass, we can use some very simple emission pathways to explore what various targets would entail. 1/11 pic.twitter.com/Kriedtf0Ec

— Zeke Hausfather (@hausfath) September 24, 2020
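One very simple emission pathway is a straight-line decline from today's emissions to zero. The sketch below assumes that shape (the thread does not say which pathways it uses), so cumulative emissions are the area of a triangle: budget = 0.5 × current emissions × years. The function names and the numbers in the example are placeholders chosen for round arithmetic, not figures from the thread.

```python
def years_to_zero_linear(remaining_budget_gt: float,
                         current_emissions_gt_per_year: float) -> float:
    """Years a straight-line decline to zero may take while emitting exactly the budget.

    Triangle area: budget = 0.5 * E0 * T, so T = 2 * budget / E0.
    """
    if current_emissions_gt_per_year <= 0:
        raise ValueError("current emissions must be positive")
    return 2.0 * remaining_budget_gt / current_emissions_gt_per_year


def cumulative_linear(current_emissions_gt_per_year: float, years: float) -> float:
    """Total emissions of a straight-line decline from E0 to zero over `years`."""
    return 0.5 * current_emissions_gt_per_year * years


# Placeholder inputs (hypothetical, for illustration only):
budget = 400.0   # remaining budget, Gt CO2
e0 = 40.0        # current emissions, Gt CO2 per year

t = years_to_zero_linear(budget, e0)
print(t)                          # 20.0
print(cumulative_linear(e0, t))   # 400.0
```

Under this assumed shape, halving the remaining budget halves the time available to reach zero, which is why small differences in the budget translate into large differences in the required pace of cuts.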

In model world the math is simple: very rapid mitigation is expensive today, particularly once you get outside the power sector, and technological advancement may make later NETs cheaper than near-term mitigation after a point. 3/10

This is, of course, problematic if the aim is to ensure that particular targets (such as well-below 2C) are met; betting that a "backstop" technology that does not exist today at any meaningful scale will save the day is a hell of a moral hazard. 4/10

Many models go completely overboard with CCS, seeing a future resurgence of coal and a large part of global primary energy occurring with carbon capture. For example, here is what the MESSAGE SSP2-1.9 scenario shows: 5/10

You May Also Like

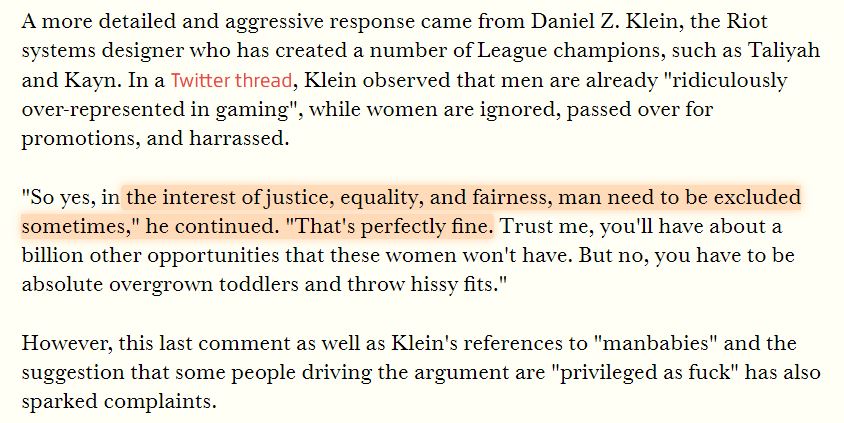

USC's Interactive Media & Games Division cancels all-star panel that included top-tier game developers who were invited to share their experiences with students. Why? Because there were no women on the

ElectronConf is a conf which chooses presenters based on blind auditions; the identity, gender, and race of the speaker are not known to the selection team. The result of that merit-based approach was an all-male panel. So they cancelled the conference.

Apple's head of diversity (a black woman) got in trouble for promoting a vision of diversity that is at odds with contemporary progressive dogma. (She left the company shortly after this

Also in the name of diversity, there is unabashed discrimination against men (especially white men) in tech, in both hiring policies and in other arenas. One such example is this, a developer workshop that specifically excluded men: https://t.co/N0SkH4hR35

1. Open TradingView in your browser and select the stock scanner in the bottom-left corner.

2. Tap the % gain change column (and u can see the highest gainers of today).

Making thread 🧵 on TradingView scanner by which you can select intraday and BTST stocks.

— Vikrant (@Trading0secrets) October 22, 2021

In just a few hours (without any watchlist)

Some manual efforts u have to put on it.

Soon going to share the process with u whenever it is ready.

"How's the josh?" guys 🕺🎷💃

3. Then, start with the 6% to 20% gainers and look at the chart of each one on the daily timeframe. (For F&O selection u can choose 1% to 4%.)

4. Then manually select the stocks which are going to give an all-time-high breakout (BO) or a 52-week-high BO, or have already given one.

5. U can also select those stocks which are going to give a range breakout or have already given a range BO.

6. If on the 15 min chart 📊 any stock is sustaining near the BO zone or after the BO, then add it to your watchlist.

7. Now next day, if any stock shows momentum, u can take a trade in it with risk management (RM).
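The filtering part of the steps above can be sketched roughly in Python. This is only an illustration under stated assumptions: the `Quote` fields, the `shortlist` function, the `near_high_pct` tolerance, and the sample data are all hypothetical, and the thread's manual chart review is reduced here to a crude "close is near or above the 52-week high" check. It does not call TradingView or any real data source.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Quote:
    symbol: str
    pct_change: float   # today's % change
    close: float        # today's close
    high_52w: float     # 52-week high before today


def shortlist(quotes: List[Quote],
              min_gain: float = 6.0,
              max_gain: float = 20.0,
              near_high_pct: float = 2.0) -> List[Quote]:
    """Keep gainers inside the band that closed near or above their 52-week high."""
    picks = []
    for q in quotes:
        in_band = min_gain <= q.pct_change <= max_gain
        # Assumed stand-in for eyeballing a breakout: close within
        # `near_high_pct` percent below the 52-week high, or above it.
        near_breakout = q.close >= q.high_52w * (1 - near_high_pct / 100)
        if in_band and near_breakout:
            picks.append(q)
    # Highest gainers first, mirroring the sort on the % change column.
    return sorted(picks, key=lambda q: q.pct_change, reverse=True)


# Hypothetical sample data, for illustration only.
sample = [
    Quote("AAA", 8.5, 102.0, 100.0),   # in band, above the old high
    Quote("BBB", 12.0, 80.0, 120.0),   # in band, far below the high
    Quote("CCC", 3.0, 50.0, 50.5),     # outside the 6-20% band
    Quote("DDD", 15.0, 99.0, 100.0),   # in band, just under the high
]

print([q.symbol for q in shortlist(sample)])             # ['DDD', 'AAA']
print([q.symbol for q in shortlist(sample, 1.0, 4.0)])   # ['CCC']
```

The second call uses the 1% to 4% band suggested for F&O selection. The 15-minute chart check and the next-day momentum entry are left out, since they depend on intraday judgment that the thread describes as manual.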

This looks very easy & simple but,

U will be amazed to see its results if you follow proper risk management.

I did 4x my capital by trading in only momentum stocks.

I will keep sharing such learning threads 🧵 for you 🙏💞🙏

Keep learning / keep sharing 🙏

@AdityaTodmal