LRT: One of the problems with Twitter moderation - and I'm not suggesting this is an innocent cause that is accidentally enabling abuse, but rather that it's a feature, from their point of view - is that the reporting categories available to us do not match up to the rules.

Because the rule's existence creates the impression that we have protections we don't.

Meanwhile, people who aren't acting in good faith can, will, and DO game the automated aspects of the system to suppress and harm their targets.

A death threat is not supposed to be allowed on here even if it's a joke. That's Twitter's premise, not mine.

A guy going "Haha get raped." is part of the plan. It's normal.

His target replying "FUCK OFF" is not. It's radical.

Things that strike the moderator as "That's just how it is on this bitch of an earth." get a pass.

And then the ultra-modern ones like "Banned from Minecraft in real life."

https://t.co/5xdHZmqLmM

Helicopter rides.

— azteclady (@HerHandsMyHands) October 3, 2020

More from 12 Foot Tall Giant Alexandra Erin

(DC POLICE) MPD CHIEF: "There was no intelligence that suggests that there would be a breach of the US Capitol."

— Chris Cioffi (@ReporterCioffi) January 7, 2021

She's on video running and tackling him. She physically attacked him.

The 22-year-old woman caught on camera allegedly physically attacking a 14-year-old Black teen and falsely accusing him of stealing her phone was arrested in California.

— CBS This Morning (@CBSThisMorning) January 8, 2021

In an exclusive interview, Miya Ponsetto and her lawyer spoke with @GayleKing hours before she was arrested. pic.twitter.com/ezaGkcWZ8j

More from Social media

We’re counting down 13 of the best ways to Halloween on Snapchat. First up – matching Lens costumes for you and your pet.

https://t.co/J0Zn7CfM1q

Tis the season to slay some ghouls. Grab some friends and dive in to Zombie Rescue Squad from @PikPokGames. How long can you survive?

https://t.co/FC9dvafUiV

Is it even Halloween if you're not FREAKED OUT? Scare yourself silly with a Dead of Night S1 rewatch.

https://t.co/LtoE7yHgaG

Be careful! Things aren’t always what they seem. Our Lenses start off cute, but are filled with spooky surprises!

https://t.co/xq45JlYeQ7

Craving candy early? Our new stickers were made to satisfy your sweet tooth.

If you thought disinformation on Facebook was a problem during our election, just wait until you see how it is shredding the fabric of our democracy in the days after.

— Bill Russo (@BillR) November 10, 2020

Look at what has happened in just the past week.

In other words, the social media monopoly Facebook commands globally has gone full fascist in an attempt to preserve the corrupt and criminal hold on power by Republicans and the Trump Administration.

Aiding and abetting a coup d’état.

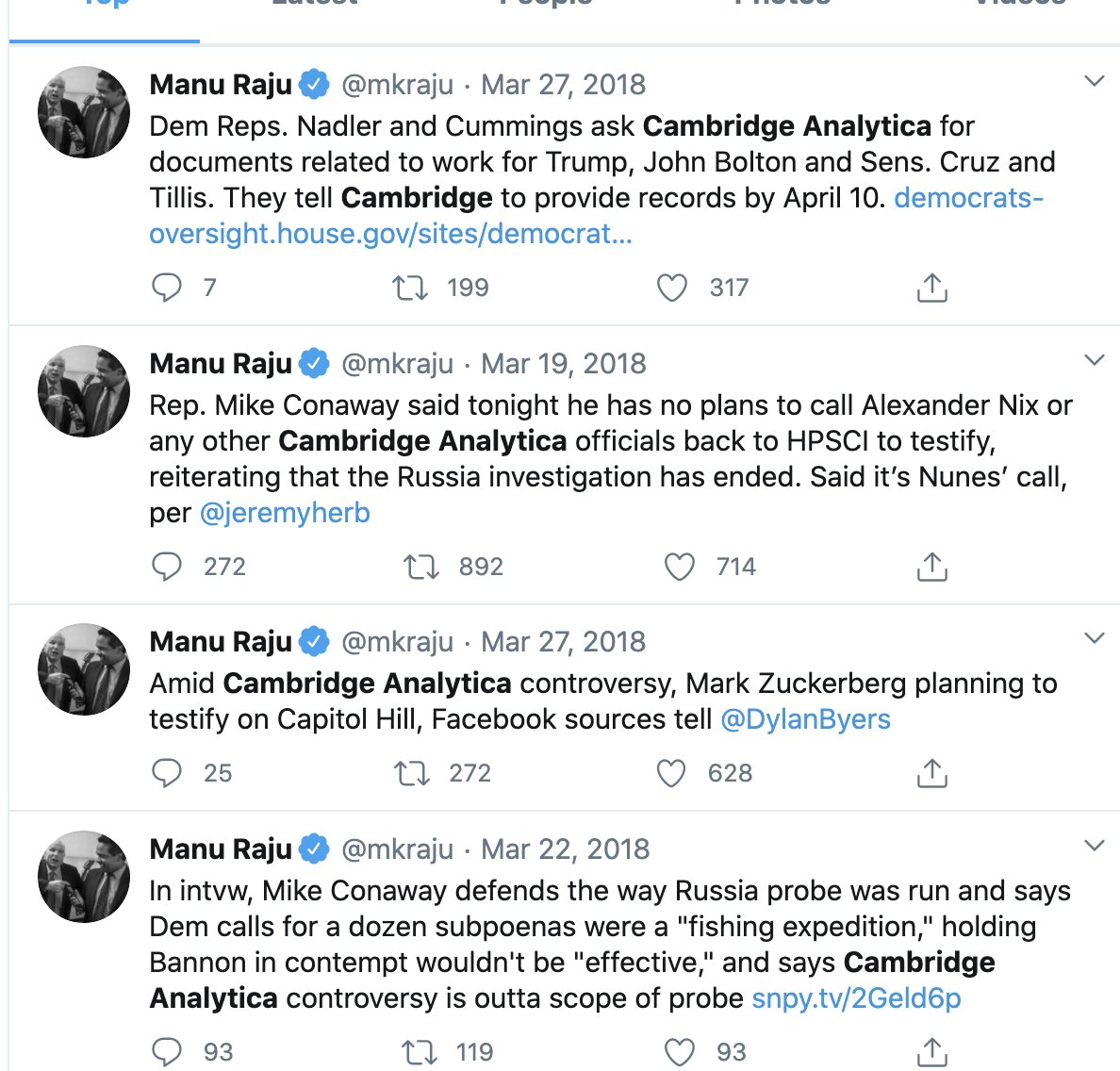

As if there weren’t enough other reasons to dismantle Facebook’s monopoly, Zuckerberg is playing his cards and revealing clearly that Cambridge Analytica election interference was not just a one-time anomaly, but is now a feature of Facebook’s business model.

Megalomaniac Mark has now revealed the true colours of Fascist Facebook.

Facebook is a weapon to manipulate the masses. A tool to carry out disinformation campaigns with impunity.

And the response of the left... is to delete their Facebook account.

As if deleting a Facebook account will do anything. It might send a message that your virtues are principled, your morality superior. But it lets the weapon keep being used to gaslight and manipulate the electorate.

An inherent flaw in the left’s critical thinking.

So let’s talk about that!

Donald Trump has spent the last few months trying to ban TikTok.

— Sophia Smith Galer (@sophiasgaler) October 6, 2020

But I've found videos that suggest his re-election campaign might be using a TikTok hype house to track how well pro-Trump messaging performs on there. My story and a 🚨 thread 🚨 below. https://t.co/2XWLTRKLqq

Super glad I could be of help btw :P

Anyhoo: my background = senior web dev, data analysis a specialty, worked in online marketing/advertising a while back

You’ve got this big TikTok account that’s ostensibly all volunteer, just promoting Trump’s app because they’re politically minded and all that.

Noooooope. They’re being paid.

Sophia says it’s just possible (journalist speak I assume) but I know exactly what I’m looking at and these guys, Conservative Hype House, are getting paid to drive traffic and app installs for Trump.

So how do you know that, Claire?

Welp, they’re using an ad tracking system that has codes assigned to specific affiliates or incoming marketing channels. These are always ALWAYS used to track metrics for which the affiliate is getting paid.
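
For anyone who wants to see what those codes look like in practice, here is a rough Python sketch of pulling tracking parameters out of a link. The URL and the `aff_id` parameter are made up for illustration (the actual links from the account aren't reproduced here), and the `utm_*` names are just the common campaign-tagging convention, not necessarily what this particular tracking system uses.

```python
from urllib.parse import urlparse, parse_qs

# Query parameters that commonly carry attribution data.
# The utm_* names follow the widely used campaign-tagging convention;
# "aff_id" is a hypothetical affiliate parameter invented for this example.
TRACKING_KEYS = {"utm_source", "utm_medium", "utm_campaign", "aff_id"}


def extract_tracking_codes(url: str) -> dict[str, str]:
    """Return only the tracking-related query parameters found in a URL."""
    query = parse_qs(urlparse(url).query)
    # parse_qs maps each key to a list of values; keep the first value.
    return {key: values[0] for key, values in query.items() if key in TRACKING_KEYS}


# Hypothetical install link, not a real one from the thread.
link = (
    "https://example.com/install"
    "?utm_source=tiktok&utm_campaign=hype_house&aff_id=1234&lang=en"
)
print(extract_tracking_codes(link))
```

Anything that comes back from a link like this is a per-channel or per-affiliate identifier, which is the kind of code being described above.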