1. While packing, I have been thinking about where we find ourselves in the abortion debate. Much of the current debate is only made possible by modern medicine. Medicine has not just been at the forefront of the technical revolution; it is at the heart of its mindset.

More from All

How can we use language supervision to learn better visual representations for robotics?

Introducing Voltron: Language-Driven Representation Learning for Robotics!

Paper: https://t.co/gIsRPtSjKz

Models: https://t.co/NOB3cpATYG

Evaluation: https://t.co/aOzQu95J8z

🧵👇(1 / 12)

Videos of humans performing everyday tasks (Something-Something-v2, Ego4D) offer a rich and diverse resource for learning representations for robotic manipulation.

Yet an underused part of these datasets is the rich, natural language annotations accompanying each video. (2/12)

The Voltron framework offers a simple way to use language supervision to shape representation learning, building off of prior work in representations for robotics like MVP (https://t.co/Pb0mk9hb4i) and R3M (https://t.co/o2Fkc3fP0e).

The secret is *balance* (3/12)

Starting with a masked autoencoder over frames from these video clips, make a choice:

1) Condition on language and improve our ability to reconstruct the scene.

2) Generate language given the visual representation and improve our ability to describe what's happening. (4/12)
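For readers new to masked autoencoders, here is a minimal sketch of the masking step alone: hide a random subset of a frame's patches and ask the model to reconstruct them from the rest. This is not Voltron's code. The function name `random_patch_mask`, the 196-patch frame, and the 0.75 mask ratio are illustrative assumptions, not values taken from the thread.

```python
import random


def random_patch_mask(num_patches, mask_ratio=0.75, seed=None):
    """Split patch indices of one frame into (visible, masked) lists.

    Hypothetical helper for illustration; mask_ratio is an assumed value.
    """
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must be in [0, 1]")
    rng = random.Random(seed)
    indices = list(range(num_patches))
    rng.shuffle(indices)                      # random order of patch ids
    num_masked = int(num_patches * mask_ratio)
    masked = sorted(indices[:num_masked])     # hidden from the encoder
    visible = sorted(indices[num_masked:])    # what the encoder sees
    return visible, masked


# Example: a 14 x 14 grid of patches (an assumed frame layout).
visible, masked = random_patch_mask(num_patches=196, mask_ratio=0.75, seed=0)
print(len(visible), len(masked))
```

The encoder would only see the `visible` patches; in option 1 above, the language annotation would be supplied as extra context for reconstructing the `masked` ones.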

By trading off *conditioning* and *generation* we show that we can 1) learn better representations than prior methods, and 2) explicitly shape the balance of low- and high-level features captured.

Why is the ability to shape this balance important? (5/12)
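One way to picture the conditioning/generation trade-off is as a single knob that blends two training losses. The thread does not say how Voltron implements the trade-off, so the sketch below is purely an assumption: a weighted sum in which a hypothetical `alpha` of 1.0 means "only language-conditioned reconstruction" and 0.0 means "only language generation". The loss values in the usage lines are made up.

```python
def blended_loss(reconstruction_loss, language_loss, alpha):
    """Blend two objectives with one weight (hypothetical, not Voltron's API).

    alpha = 1.0 -> only the language-conditioned reconstruction loss
    alpha = 0.0 -> only the language generation loss
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return alpha * reconstruction_loss + (1.0 - alpha) * language_loss


# Made-up loss values, swept over three settings of the knob.
for alpha in (1.0, 0.5, 0.0):
    print(alpha, blended_loss(0.4, 2.0, alpha))
```

Under this reading, moving `alpha` toward 1.0 favors pixel-level reconstruction and moving it toward 0.0 favors describing what is happening, which is one way to think about the low-level versus high-level balance the thread mentions.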

You May Also Like

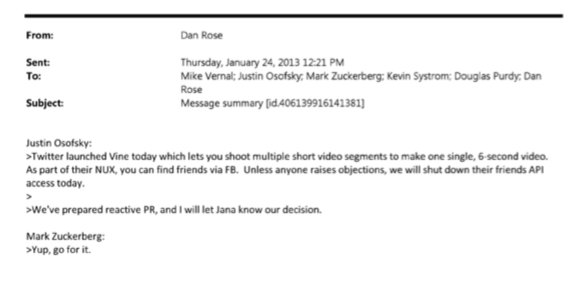

BREAKING: @CommonsCMS @DamianCollins just released previously sealed #Six4Three @Facebook documents:

Some random interesting tidbits:

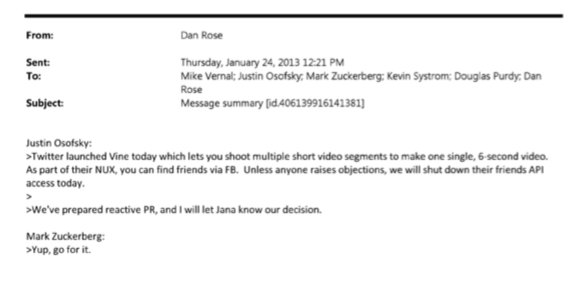

1) Zuck approves shutting down platform API access for Twitter when Vine is released #competition

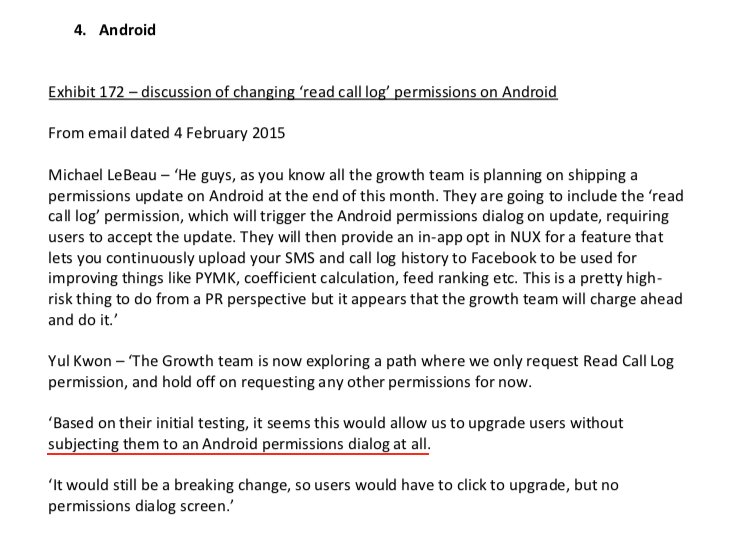

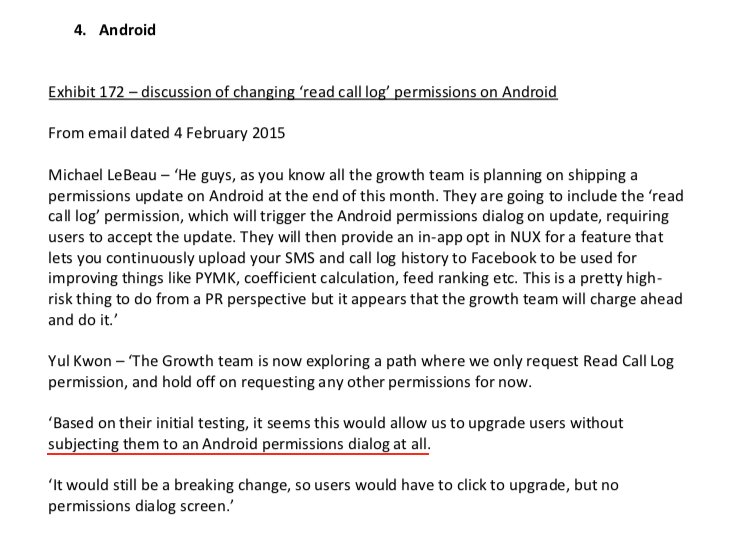

2) Facebook engineered ways to access users' call history w/o alerting users:

The team considered access to call history a 'high PR risk', but the 'growth team will charge ahead'. @Facebook created an upgrade path to access the data w/o subjecting users to the Android permissions dialogue.

3) The above also confirms @kashhill's and others' suspicion that call history was used to improve PYMK (People You May Know) suggestions and newsfeed rankings.

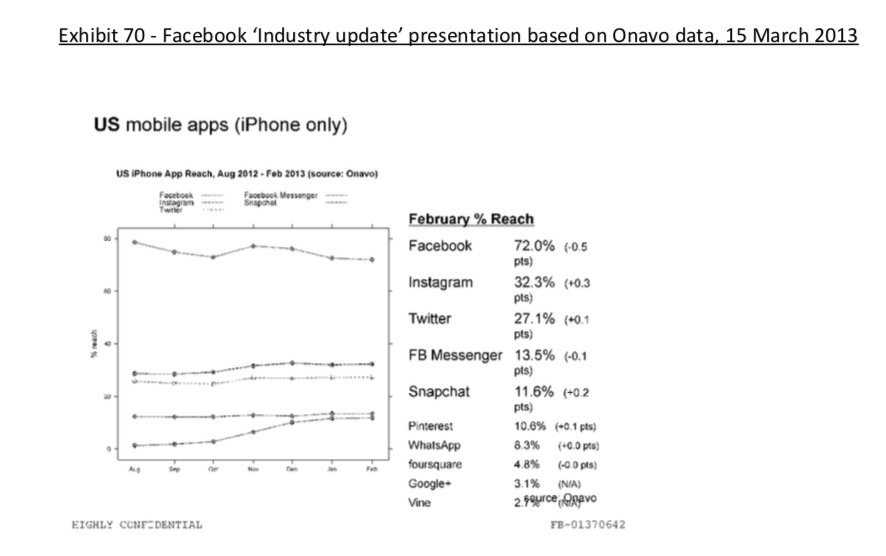

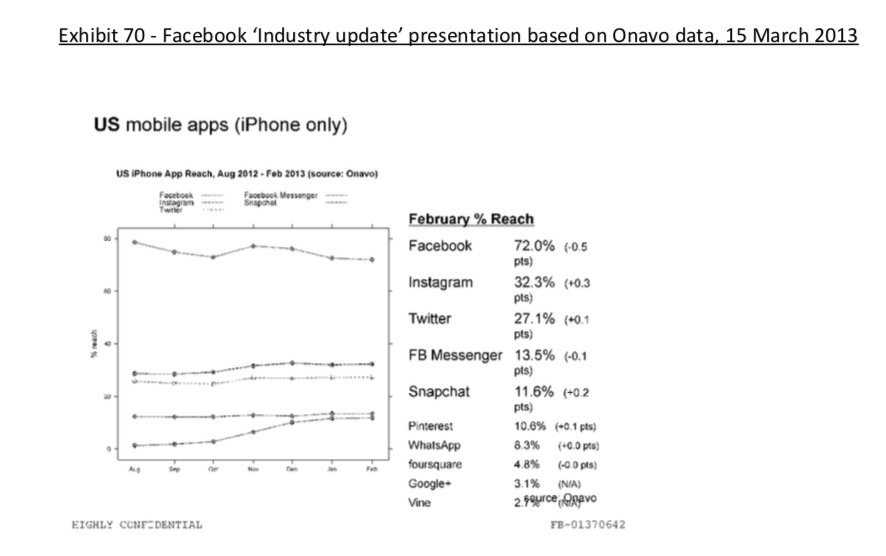

4) Docs also shed more light on @dseetharaman's story about @Facebook monitoring users' @Onavo VPN activity to determine which competitors to mimic or acquire in 2013.

https://t.co/PwiRIL3v9x
