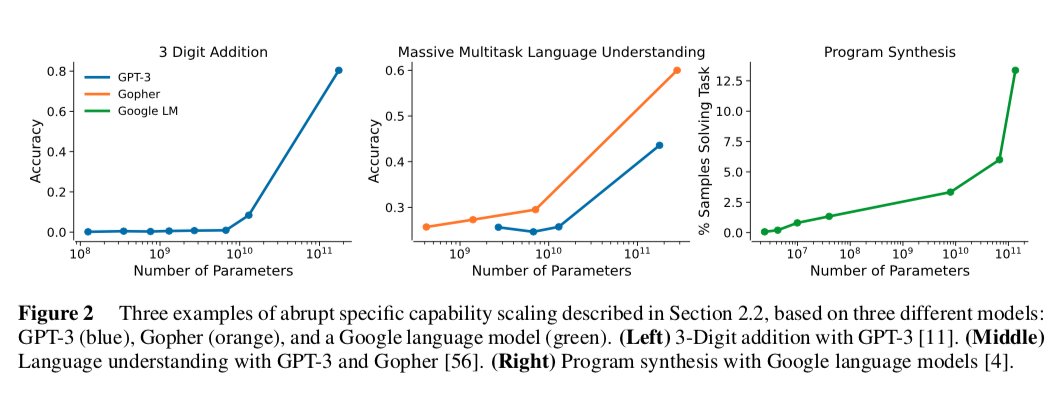

Thread on @AnthropicAI's cool new paper on how large models are both predictable (scaling laws) and surprising (capability jumps).

1. That there’s a capability jump in 3-digit addition for GPT3 (left) is unsurprising. The challenge is to better predict when such jumps will occur.

This jump is surprising and I’d like to understand better why it happens at all.

Related work:

1. @gwern's list of capability jumps and classic article on scaling

https://t.co/C8qljJa13A

https://t.co/er791mhbjP

3. Lukas Finnveden's post on GPT-n extrapolation/scaling on different tasks

https://www.lesswrong.com/posts/k2SNji3jXaLGhBeYP/extrapolating-gpt-n-performance