
I don't think people have quite internalized *how* the US became the global leader in science and technology. It's partially a story of massive global talent migration.

And it's important to get this story right if we want to maintain that lead.

Then came three enormous waves of academic and scientific talent to the US.

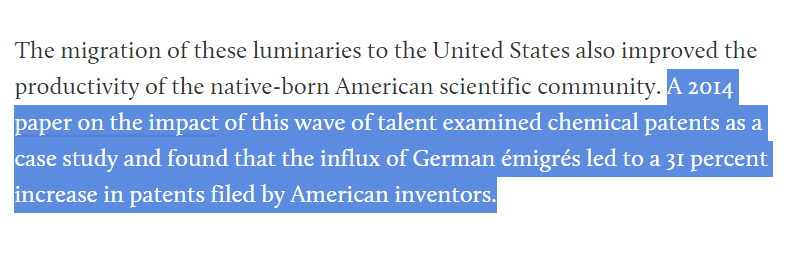

In a massive own goal, Nazi Germany in the 1930s dismissed ~15% of its physicists, who accounted for a stunning 64% (!) of the country's physics citations.

With the Cold War looming, the US brought over ~1,600 scientists through Operation Paperclip and the Soviets ~2,500 through Operation Osoaviakhim.

Perhaps an underrated element in the fall of the Soviet Union is how we absorbed most of their top scientific talent as faith in the regime was starting to falter.

— Caleb Watney (@calebwatney) July 6, 2020

A great 1990 NYT article on it here: https://t.co/NXBldbgoV0

https://t.co/i0QMZeQaEa

Using the Nobel Prize in Physics as a rough proxy, American scientists were involved in only three of the thirty prizes awarded between 1901 and 1933.

And a huge share of these Nobel laureates have been either first- or second-generation immigrants from these three waves.

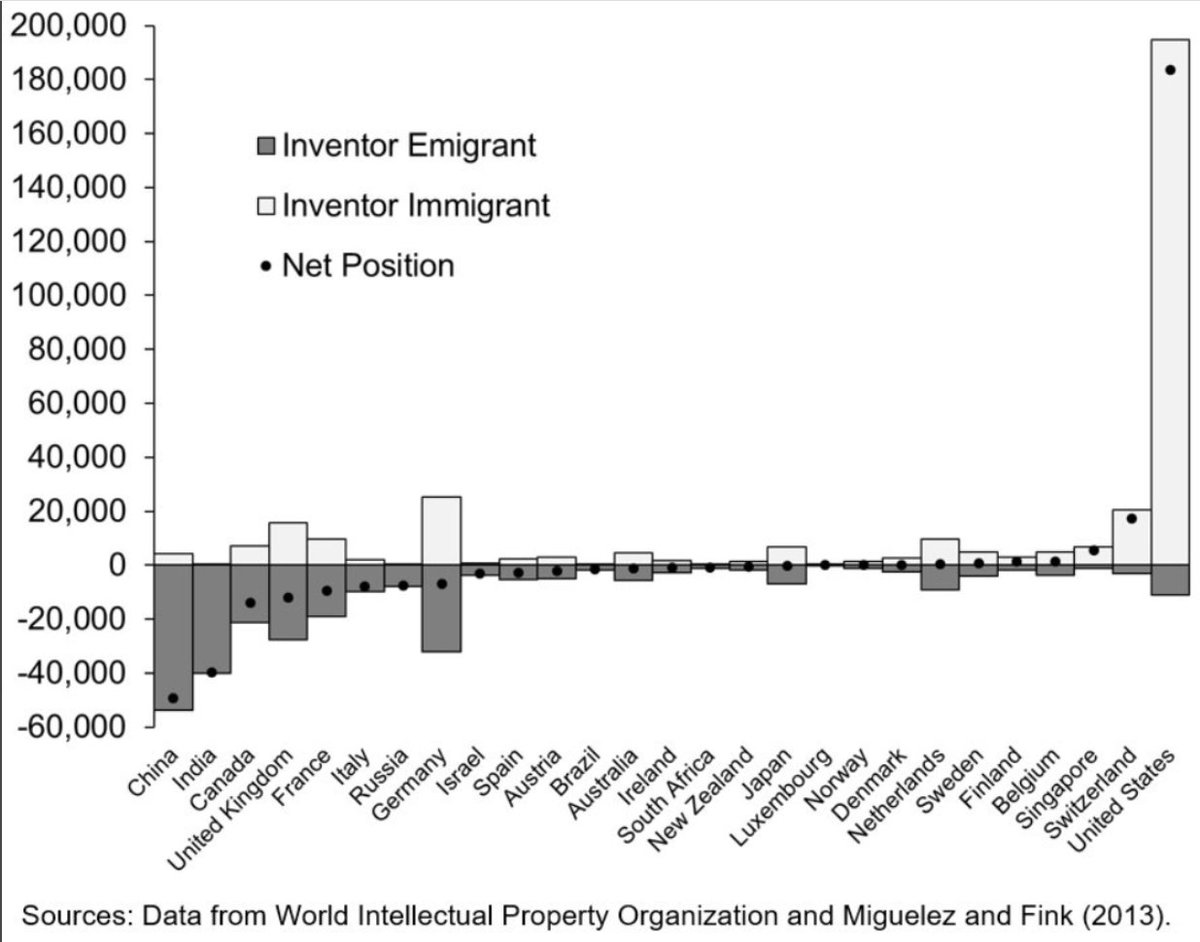

We were unquestionably the best location for scientific research and so most inventors/promising academics wanted to come here.

1) US immigration restrictions are growing more burdensome

2) There are better opportunities to contribute to cutting-edge research at home

https://t.co/EYSUhofYeO

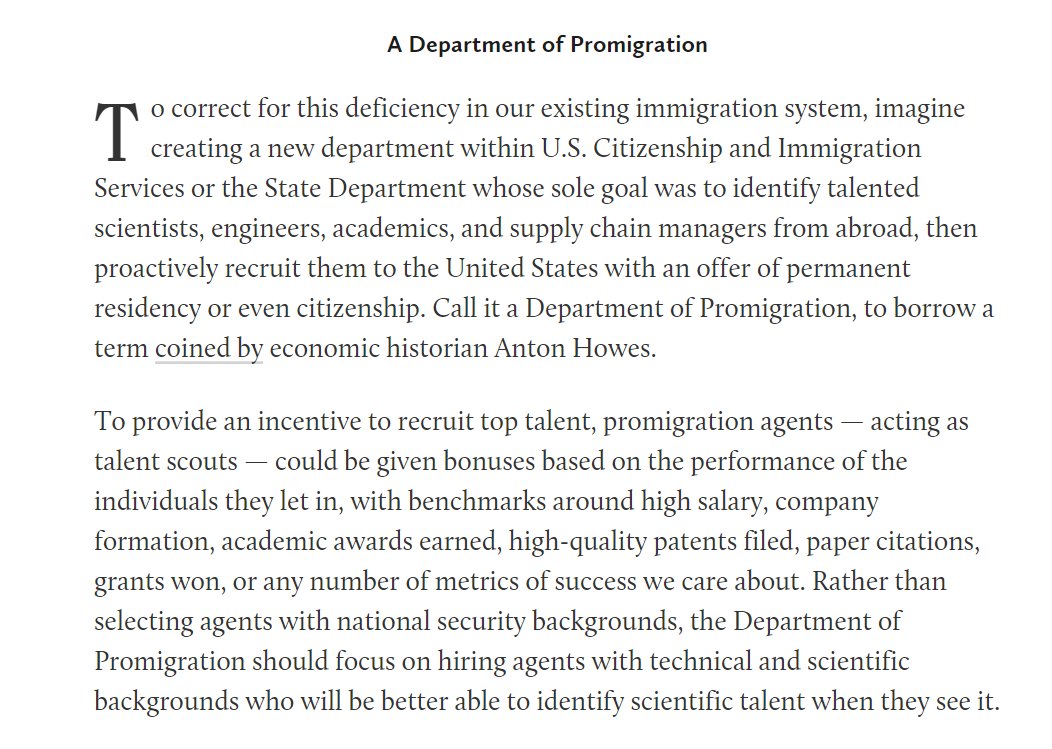

Today we grudgingly let the most talented individuals apply to live here; we do not actively recruit them.

The main job of immigration agents seems to be “avoid letting in terrorists” rather than “maximize the growth potential of the United States.”

We are the R&D lab for the world and we should act like it.

https://t.co/jwGI6exill


The entire discussion around Facebook’s disclosures of what happened in 2016 is very frustrating. No exec stopped any investigations, but there were a lot of heated discussions about what to publish and when.

In the spring and summer of 2016, as reported by the Times, activity we traced to GRU was reported to the FBI. This was the standard model of interaction companies used for nation-state attacks against likely US targets.

In the spring of 2017, after a deep dive into the fake news phenomenon, the security team wanted to publish an update that covered what we had learned. At this point, we didn’t have any advertising content or the big IRA cluster, but we did know about the GRU model.

This report went through dozens of edits as different equities were represented. I did not have any meetings with Sheryl on the paper, but I can’t speak to whether she was in the loop with my higher-ups.

In the end, the difficult question of attribution was settled by us pointing to the DNI report instead of saying Russia or GRU directly. In my pre-briefs with members of Congress, I made it clear that we believed this action was GRU.

The story doesn’t say you were told not to... it says you did so without approval and they tried to obfuscate what you found. Is that true?

— Sarah Frier (@sarahfrier) November 15, 2018


THREAD: How is it possible to train a well-performing, advanced Computer Vision model **on the CPU**? 🤔

At the heart of this lies the most important technique in modern deep learning: transfer learning.

Let's analyze how it works.
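The core idea of transfer learning can be sketched in a few lines of plain Python, under heavy simplifying assumptions. A frozen "backbone" turns raw inputs into features, and only a small new "head" on top is trained. All names here (`FROZEN_W`, `backbone`, `train_head`, `predict`) are hypothetical. The backbone weights are random stand-ins, whereas in practice they would come from a network pretrained on a large dataset, which is what keeps the remaining training cheap enough for a CPU. This is a toy illustration, not the thread author's code.

```python
import math
import random

random.seed(0)

# Hypothetical stand-in for a pretrained backbone. In real transfer learning these
# weights would be learned on a large dataset; here they are fixed random numbers.
# Either way, they are frozen: training below never updates them.
FROZEN_W = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(3)]


def backbone(x):
    """Frozen feature extractor: one ReLU layer mapping 4 inputs to 3 features."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(head_w, head_b, x):
    """Probability of class 1: frozen features followed by the trainable head."""
    feats = backbone(x)
    return sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)


def train_head(data, epochs=200, lr=0.5):
    """Fit only a new logistic-regression head with plain SGD."""
    head_w = [0.0] * len(FROZEN_W)
    head_b = 0.0
    # The backbone is frozen, so its features can be computed once up front.
    feats = [(backbone(x), y) for x, y in data]
    for _ in range(epochs):
        for f, y in feats:
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            grad = p - y  # derivative of binary cross-entropy w.r.t. the logit
            head_w = [w - lr * grad * fi for w, fi in zip(head_w, f)]
            head_b -= lr * grad
    return head_w, head_b


# Toy usage with an arbitrary rule: label is 1 when the first input is positive.
xs = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(20)]
data = [(x, 1 if x[0] > 0 else 0) for x in xs]
w, b = train_head(data)
print(predict(w, b, xs[0]))
```

Because the backbone never changes, its features are computed once and reused. Each training step then touches only a handful of head parameters, which is the property that makes this style of training light on hardware.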

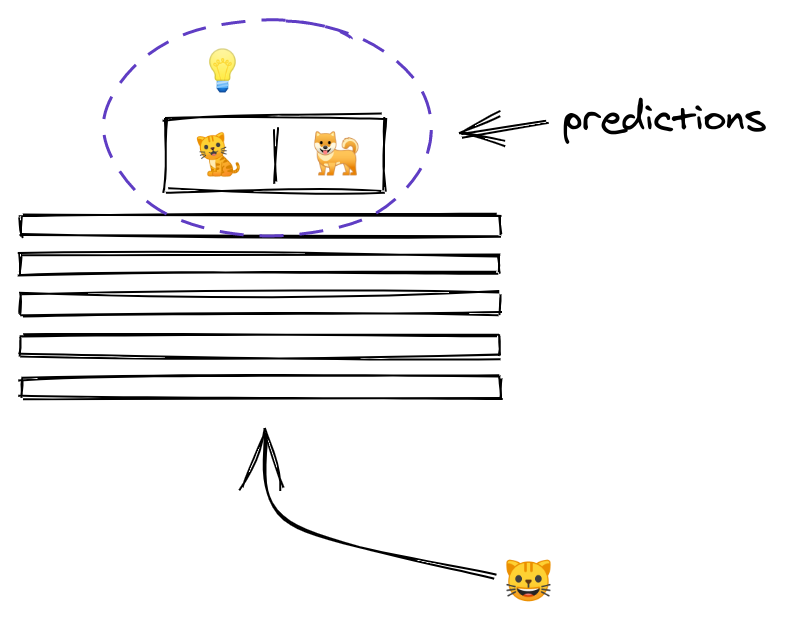

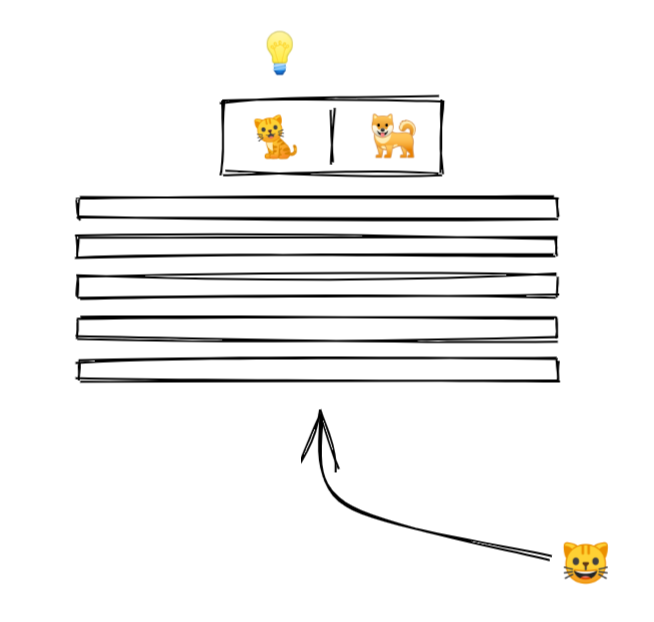

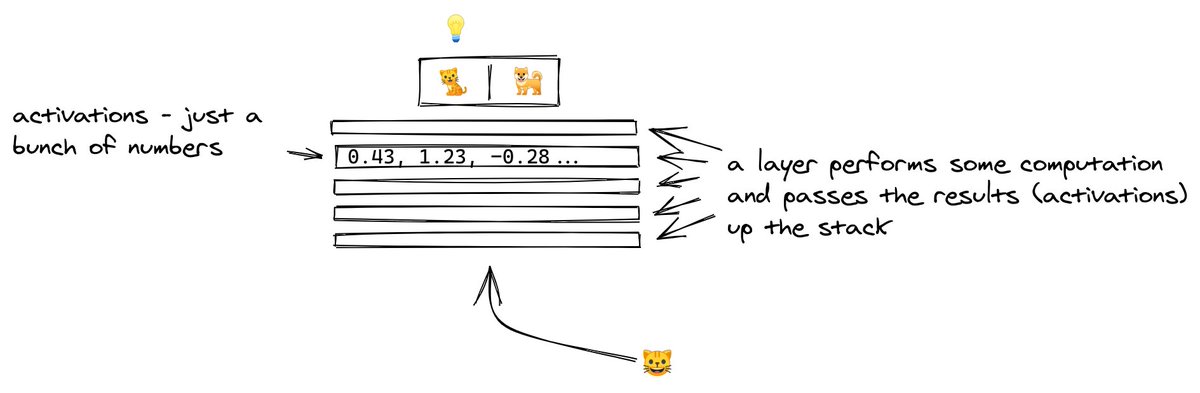

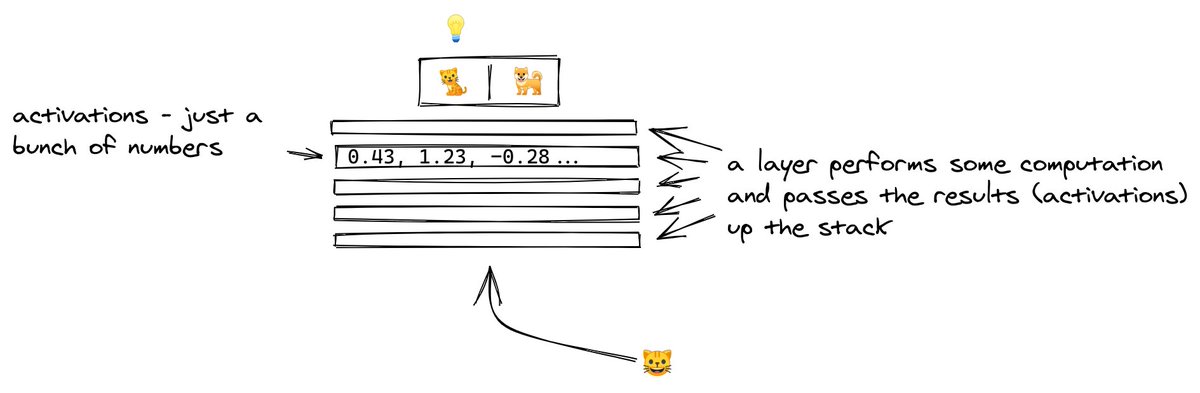

2/ For starters, let's look at what a neural network (NN for short) does.

An NN is like a stack of pancakes, with computation flowing up when we make predictions.

How does it all work?

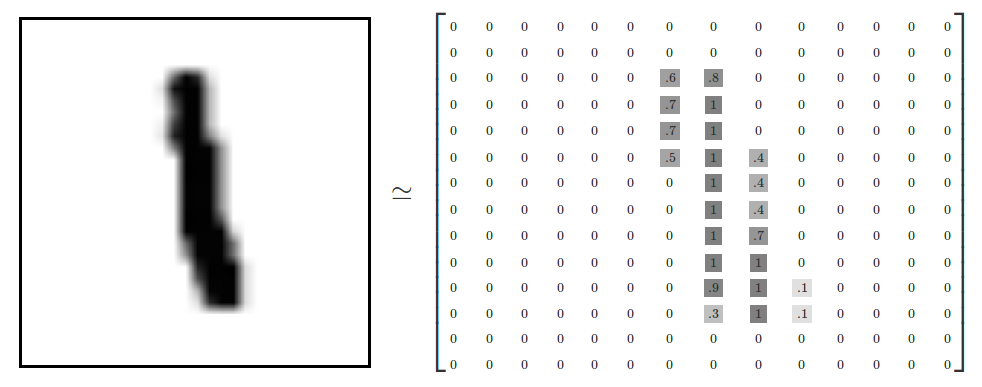

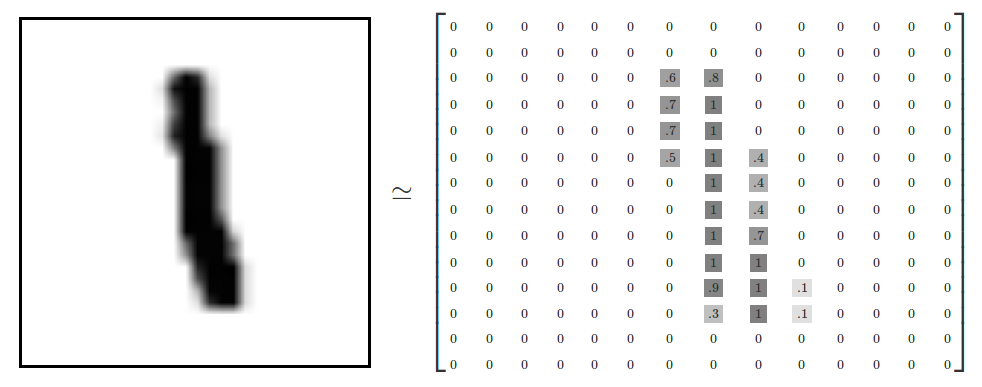

3/ We show an image to our model.

An image is a collection of pixels. Each pixel is just a bunch of numbers describing its color.

Here is what it might look like for a black-and-white image:
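As a minimal sketch, assume a grayscale image where each pixel is a single brightness value from 0 (black) to 255 (white). A color image would typically store three numbers (red, green, blue) per pixel instead. The 5x5 `image` and the `to_input_vector` helper are hypothetical and serve only to show the image becoming a list of numbers a model can consume.

```python
# A tiny, hypothetical 5x5 black-and-white image: one number per pixel,
# 0 = black, 255 = white.
image = [
    [0,   0,   255, 0,   0],
    [0,   255, 255, 255, 0],
    [255, 255, 255, 255, 255],
    [0,   255, 255, 255, 0],
    [0,   0,   255, 0,   0],
]


def to_input_vector(img):
    """Flatten the grid row by row and scale each pixel to the range [0, 1]."""
    return [pixel / 255 for row in img for pixel in row]


x = to_input_vector(image)
print(len(x))  # 25
print(x[:5])   # [0.0, 0.0, 1.0, 0.0, 0.0]
```

Scaling to [0, 1] is a common preprocessing choice, assumed here rather than taken from the thread.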

4/ The picture goes into the layer at the bottom.

Each layer performs computation on the image, transforming it and passing it upwards.
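The "stack of pancakes" can be sketched as a list of functions applied bottom to top, each transforming the previous layer's output. This is a simplified, untrained example. It uses fully connected layers with random weights, whereas real computer vision models typically use convolutional layers. The layer sizes, `make_dense_layer` and `forward` are hypothetical choices for illustration.

```python
import random

random.seed(42)


def make_dense_layer(n_in, n_out):
    """Hypothetical fully connected layer with random (untrained) weights."""
    weights = [[random.gauss(0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out

    def layer(inputs):
        # Each output is a weighted sum of all inputs plus a bias.
        return [sum(w * x for w, x in zip(row, inputs)) + b
                for row, b in zip(weights, biases)]

    return layer


def relu(values):
    """Non-linearity applied between layers: negative values become 0."""
    return [max(0.0, v) for v in values]


# Bottom layer first, top layer last: 25 pixel values in, 2 numbers out.
layers = [
    make_dense_layer(25, 16), relu,
    make_dense_layer(16, 8), relu,
    make_dense_layer(8, 2),
]


def forward(x, layers):
    activations = x
    for layer in layers:  # computation flows upwards, one layer at a time
        activations = layer(activations)
    return activations


x = [random.random() for _ in range(25)]  # stand-in for a flattened 5x5 image
out = forward(x, layers)
print(len(out))  # 2
```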

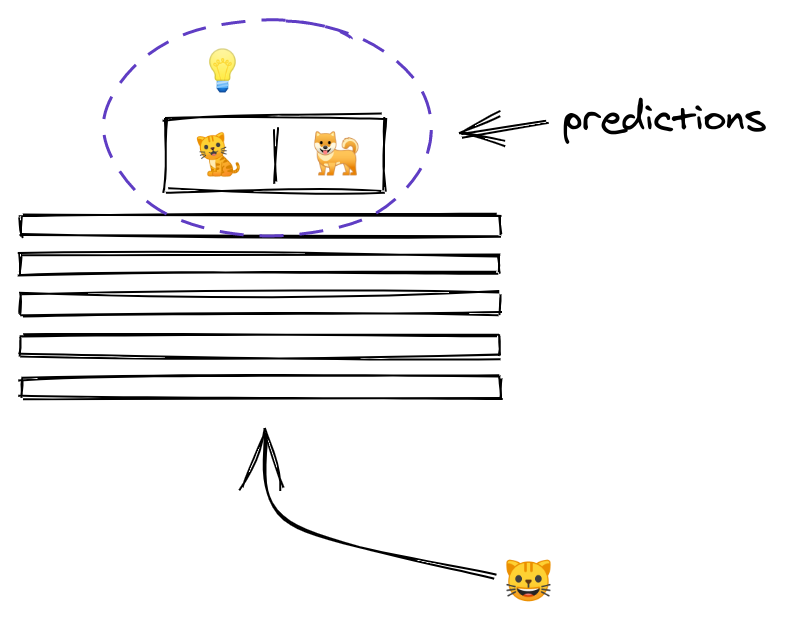

5/ By the time the image reaches the uppermost layer, it has been transformed to the point that it now consists of two numbers only.

The outputs of a layer are called activations, and the outputs of the last layer have a special meaning... they are the predictions!
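To turn those two final numbers into a readable prediction, one common approach is to pass them through a softmax, which produces probabilities that sum to 1. The prediction is then the class with the highest probability. The sketch below assumes the two outputs correspond to two classes. The `CLASSES` labels ("cat", "dog") and the example activations are hypothetical, and softmax is a typical choice rather than something the thread specifies.

```python
import math


def softmax(logits):
    """Turn raw last-layer activations into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


CLASSES = ["cat", "dog"]  # hypothetical label order


def predict(last_layer_activations):
    """Return the most likely class and its probability."""
    probs = softmax(last_layer_activations)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best], probs[best]


label, confidence = predict([0.3, 2.1])
print(label)  # dog
```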


THREAD: Can you start learning cutting-edge deep learning without specialized hardware? 🤖

— Radek Osmulski (@radekosmulski) February 11, 2021

In this thread, we will train an advanced Computer Vision model on a challenging dataset. 🐕🐈 Training completes in 25 minutes on my 3-year-old Ryzen 5 CPU.

Let me show you how...
