2/19

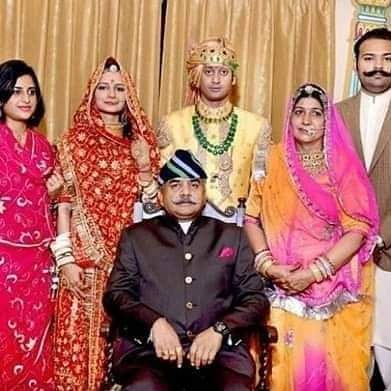

The last Hindu king of Pakistan.

The name Amarkot is known to all in our subcontinent as the birthplace of Emperor Akbar. When Humayun was fleeing from Sher Shah, Rana Prasad of Amarkot sheltered him and this is where Emperor Akbar was born.

#Thread

1/19

@talesofBharat

2/19

But another reason why the name of this Amarkot is inextricably linked with the history of the subcontinent is the partition of the country.

Sodha is the family name of the Maharajas of..

3/19

4/19

After the capture of the fort by the

5/19

6/19

In 1946,Jawaharlal Nehru went to Amarkot to invite the then Maharaja Rana Arjun Singh to join the Congress. At that time Amarkot had a population of 12,000 Hindus which was 90% of the

7/19

8/19

In fact, the reason behind Rana joining the Muslim League was different. The Maharaja of Jodhpur had a perpetual hostile relationship with the Rana of Amarkot from the twelfth century onwards.

9/19

10/19

During the war on the western battlefield in 1971,

11/19

12/19

Rana Chander Singh Sodha is a very conservative person personally and he even adheres to the caste system very well even though he maintained perfect

Harmony

13/19

14/19

15/19

16/19

His son Hamir Singh Sodha was also the Minister of Agriculture in the Sindh Provincial Council and is currently the Member of Parliament for Pakistan.

17/19

More from All

https://t.co/6cRR2B3jBE

Viruses and other pathogens are often studied as stand-alone entities, despite that, in nature, they mostly live in multispecies associations called biofilms—both externally and within the host.

https://t.co/FBfXhUrH5d

Microorganisms in biofilms are enclosed by an extracellular matrix that confers protection and improves survival. Previous studies have shown that viruses can secondarily colonize preexisting biofilms, and viral biofilms have also been described.

...we raise the perspective that CoVs can persistently infect bats due to their association with biofilm structures. This phenomenon potentially provides an optimal environment for nonpathogenic & well-adapted viruses to interact with the host, as well as for viral recombination.

Biofilms can also enhance virion viability in extracellular environments, such as on fomites and in aquatic sediments, allowing viral persistence and dissemination.

Viruses and other pathogens are often studied as stand-alone entities, despite that, in nature, they mostly live in multispecies associations called biofilms—both externally and within the host.

https://t.co/FBfXhUrH5d

Microorganisms in biofilms are enclosed by an extracellular matrix that confers protection and improves survival. Previous studies have shown that viruses can secondarily colonize preexisting biofilms, and viral biofilms have also been described.

...we raise the perspective that CoVs can persistently infect bats due to their association with biofilm structures. This phenomenon potentially provides an optimal environment for nonpathogenic & well-adapted viruses to interact with the host, as well as for viral recombination.

Biofilms can also enhance virion viability in extracellular environments, such as on fomites and in aquatic sediments, allowing viral persistence and dissemination.

You May Also Like

I think a plausible explanation is that whatever Corbyn says or does, his critics will denounce - no matter how much hypocrisy it necessitates.

Corbyn opposes the exploitation of foreign sweatshop-workers - Labour MPs complain he's like Nigel

He speaks up in defence of migrants - Labour MPs whinge that he's not listening to the public's very real concerns about immigration:

He's wrong to prioritise Labour Party members over the public:

He's wrong to prioritise the public over Labour Party

One of the oddest features of the Labour tax row is how raising allowances, which the media allowed the LDs to describe as progressive (in spite of evidence to contrary) through the coalition years, is now seen by everyone as very right wing

— Tom Clark (@prospect_clark) November 2, 2018

Corbyn opposes the exploitation of foreign sweatshop-workers - Labour MPs complain he's like Nigel

He speaks up in defence of migrants - Labour MPs whinge that he's not listening to the public's very real concerns about immigration:

He's wrong to prioritise Labour Party members over the public:

He's wrong to prioritise the public over Labour Party

My top 10 tweets of the year

A thread 👇

https://t.co/xj4js6shhy

https://t.co/b81zoW6u1d

https://t.co/1147it02zs

https://t.co/A7XCU5fC2m

A thread 👇

https://t.co/xj4js6shhy

Entrepreneur\u2019s mind.

— James Clear (@JamesClear) August 22, 2020

Athlete\u2019s body.

Artist\u2019s soul.

https://t.co/b81zoW6u1d

When you choose who to follow on Twitter, you are choosing your future thoughts.

— James Clear (@JamesClear) October 3, 2020

https://t.co/1147it02zs

Working on a problem reduces the fear of it.

— James Clear (@JamesClear) August 30, 2020

It\u2019s hard to fear a problem when you are making progress on it\u2014even if progress is imperfect and slow.

Action relieves anxiety.

https://t.co/A7XCU5fC2m

We often avoid taking action because we think "I need to learn more," but the best way to learn is often by taking action.

— James Clear (@JamesClear) September 23, 2020